With more businesses competing online every year, your website needs to rank high on search engine results pages (SERPs) to reach as many of your target customers as possible. That means investing in technical SEO; without it, your website may fail to rank and potential customers won’t find you. Professional SEO services can be a worthwhile investment for identifying and fixing common technical SEO issues and mistakes.

Even so, you should know the most common reasons a website doesn’t reach its desired search ranking. This article explains what technical SEO is, why it matters, and which technical SEO mistakes may be hurting your website’s performance in search results.

Keep reading.

What is Technical SEO?

Technical SEO is the process of optimizing your website and server so that search engine spiders can crawl and index your site more effectively, which helps boost your organic rankings. In practice, it means configuring elements of your website so search engines can find, read, and index it.

It covers both basic and advanced topics, such as site architecture, crawling, indexing, sitemap generation, page speed, site migrations, and Core Web Vitals. If you want your website to rank high on SERPs, you need to get your technical SEO right.

What is the Importance of Technical SEO?

Your website may have the best content, but if its technical SEO is not up to the mark, it won’t rank on SERPs. Even top-notch content can’t do wonders if no one can find it.

Search engines keep getting better at crawling, indexing, and understanding website content, but they are not perfect. That is why valuable content needs a firm technical SEO foundation. Effective SEO helps generate leads and boost conversions and sales.

Technical SEO is crucial because, without it, your website may be indexed incompletely or incorrectly. When pages aren’t properly indexed, Google, Bing, Yahoo, and other search engines can’t tell when to display them on SERPs.

A technical SEO audit helps confirm that your website has a sound framework, so all the work you put into it can be indexed and ranked.

Now, let’s discuss the most common technical SEO mistakes that your website may have and how to fix those errors.

What Are the Most Common Technical SEO Mistakes, and How Do You Fix Them?

Here are the technical SEO mistakes websites most often make:

Duplicate Content

Google favors unique content, so duplicate content is a significant mistake if you want to rank at the top of the first page of Google’s search results.

Keep your content unique and fresh by updating your website consistently and posting blogs frequently. Substantial updates tend to send a stronger signal to Google than minor tweaks, so when you refresh your content, aim for meaningful improvements rather than cosmetic edits. Besides adding blog posts and web pages, create supplemental content that adds value for searchers and your target customers.

Here are the different types of content you can add to your website:

- Infographics

- Whitepapers

- Ebooks

- Downloadable templates

- Interactive tools or maps

- Videos

After drafting your content, use tools such as SEMRush and DeepCrawl to find duplicate content. Duplication can occur when someone else reposts your content on another URL, or when you publish similar content on both a web page and a blog post. These tools crawl your website (and, in some cases, others on the internet) to check whether your content closely matches content that was published earlier.
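Commercial crawlers use their own, more sophisticated methods. To illustrate only the basic idea, here is a minimal Python sketch that compares two passages. It uses overlapping three-word "shingles" and Jaccard similarity. The shingle size, and whatever score you decide counts as "too similar", are assumptions to tune for your site.

```python
import re


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping word n-grams ("shingles")."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Very short text: treat the whole thing as one shingle.
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of two texts' shingle sets (0.0 = unrelated, 1.0 = identical)."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


# Hypothetical example: a service page and a blog post reusing most of its wording.
page = "Our plumbers fix leaking pipes fast in Austin and nearby towns."
blog = "Our plumbers fix leaking pipes fast in Austin and the nearby suburbs."
print(round(similarity(page, blog), 2))
```

A score near 1.0 means the two texts are close to identical. Pages that score high against each other are candidates for rewriting, consolidating, or a canonical tag.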

To prevent duplication, make sure every web page is unique. Each page needs its own meta description, title, URL, and H1/H2/H3 headings. The H1 heading should be prominent and contain the page’s primary keyword. Where it fits naturally, work a relevant keyword into each section of the page, prioritizing the keywords with the most potential.

Be careful when reusing ALT text across images. ALT text should include keywords where relevant, but it shouldn’t be identical from image to image. Vary the wording and describe each image in its own terms so your ALT text isn’t treated as copied content.

Good keyword placement helps Google understand the topic of a page. Add your target keyword or phrase to the title tag, near the start of the page content, and naturally throughout the rest of the page. Always write your content for people, not search engines. Every page should read naturally rather than being stuffed with keywords to attract Google’s crawlers.

Avoid keyword stuffing: it makes your content look spammy, and Google may penalize your website for it. Aim to build relevance signals for Google without overdoing them.

If visitors leave your website quickly (known as bouncing), Google may conclude that your content isn’t relevant to the search query that brought them there. Your content therefore needs to be engaging and deliver the right message. When visitors spend time on your site, it signals that you are offering value, which is what Google wants to show searchers.

Domain History and Registration

Your domain is another crucial factor in technical SEO performance. A website that repeatedly ranks poorly on Google may have a poor domain history. Google’s Panda and Penguin algorithm updates target abusive tactics used to inflate rankings, and a domain hit by them can carry that damage forward. Avoid hosts associated with spammers, and never use “black hat SEO tactics”. Google tends to recognize them eventually and may drop your website from its results.

Keep your domain registration current, and consider registering it for a longer period. The reasoning is that search engines may give more credit to websites they expect to be around for years. A short-term registration can look less authoritative, since it may belong to a temporary or poorly maintained site rather than a well-established one on a similar topic.

When choosing a domain, include your keyword and, if possible, make it the first word in the domain. If the domain you want is already registered, don’t worry; try including the keyword in a subdomain instead. For instance, you might set up “seo.website.com” rather than “www.website.com/seo”.

An SEO plugin such as Yoast SEO can help you optimize pages precisely and naturally. It can flag readability issues, show how often you use your target keyword or phrase on each page, and check whether your images are optimized. Rank Math is another option for WordPress SEO.

You should also check your structured data (schema markup) for duplicate content. Many businesses overlook this element, and their search rankings suffer for it.

Google’s Rich Results Test (along with the Schema Markup Validator at schema.org) shows the structured data detected on a page, which makes duplicated items in your schema easy to spot.

No Meta Descriptions in Web Pages

A web page’s meta description is a brief snippet (the two gray-colored lines of text in the image below) that summarizes your webpage content.

Search engines often display the meta description when the searched phrase appears in it, which is why it pays to optimize your meta descriptions for SEO.

Add a meta description of roughly 150 characters to every page. On a WordPress website with an SEO plugin such as Yoast, the meta description field appears below the post editor. Place your most important keywords early, before the point where SERPs cut the snippet off. Missing or duplicate meta descriptions are a common cause of poor SEO performance.
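To audit meta descriptions across many pages at once, a small script can do a first pass. The sketch below is an illustration, not a replacement for an SEO crawler. It uses Python’s built-in html.parser and flags missing, overly long, and duplicated descriptions. The 160-character limit is an assumed cut-off: Google truncates snippets by pixel width, so the real limit varies.

```python
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collects the content of every <meta name="description"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.descriptions: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "description":
            self.descriptions.append((attributes.get("content") or "").strip())


def audit_meta_descriptions(pages: dict[str, str], max_len: int = 160) -> dict[str, list[str]]:
    """Map each URL to its meta description problems; max_len is an assumed cut-off."""
    problems: dict[str, list[str]] = {url: [] for url in pages}
    seen: dict[str, str] = {}  # description text -> first URL that used it
    for url, html in pages.items():
        parser = MetaDescriptionParser()
        parser.feed(html)
        if not parser.descriptions or not parser.descriptions[0]:
            problems[url].append("missing meta description")
            continue
        text = parser.descriptions[0]
        if len(text) > max_len:
            problems[url].append(f"too long ({len(text)} characters)")
        if text in seen:
            problems[url].append(f"duplicate of {seen[text]}")
        else:
            seen[text] = url
    return problems


# Hypothetical pages: two share a description, one has none.
pages = {
    "/": '<head><meta name="description" content="Emergency plumbing in Austin, 24/7."></head>',
    "/about": '<head><meta name="description" content="Emergency plumbing in Austin, 24/7."></head>',
    "/contact": "<head><title>Contact</title></head>",
}
for url, issues in audit_meta_descriptions(pages).items():
    print(url, issues)
```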

Weak Internal Linking Framework

A strong internal linking structure helps your website rank high on SERPs, while a poorly planned one can cause critical SEO issues.

Make your internal linking structure as effective as possible. Your pages should link to one another through practical navigational links and descriptive, optimized anchor text.

Broken Website Internal Links

Google follows the internal links on your pages when crawling a website for indexing. If those links are broken, its crawlers hit a dead end and don’t know where to go next.

Broken internal links also damage your website’s credibility: no one wants to browse a site full of 404 error messages. Tools such as Google Search Console and Broken Link Checker can find broken links. Enter a URL, and they list the broken links and 404 errors they discover. Once you know where the broken links are, fix or remove them.
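Under the hood, a link checker does roughly two things: it collects every link on a page and requests each one. Here is a minimal Python sketch of that idea using only the standard library. The example URLs are hypothetical. The sketch assumes your server answers HEAD requests; some servers return 405 for HEAD, in which case you would retry with GET.

```python
from html.parser import HTMLParser
from typing import Callable
from urllib.error import HTTPError
from urllib.parse import urljoin, urlparse
from urllib.request import Request, urlopen


class LinkParser(HTMLParser):
    """Collects the href of every <a> tag on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(page_url: str, html: str) -> set[str]:
    """Resolve relative links and keep only those on the page's own host."""
    parser = LinkParser()
    parser.feed(html)
    host = urlparse(page_url).netloc
    links = set()
    for href in parser.hrefs:
        absolute = urljoin(page_url, href).split("#")[0]  # drop #fragments
        if urlparse(absolute).netloc == host:  # skips external and mailto: links
            links.add(absolute)
    return links


def http_status(url: str) -> int:
    """Send a HEAD request (following redirects) and return the final status code."""
    try:
        with urlopen(Request(url, method="HEAD"), timeout=10) as response:
            return response.status
    except HTTPError as error:  # 4xx/5xx responses arrive as exceptions
        return error.code
    except OSError:  # DNS failure, refused connection, timeout
        return 0


def broken_links(page_url: str, html: str,
                 get_status: Callable[[str], int] = http_status) -> list[str]:
    """List internal links that fail with a 4xx/5xx status or no response at all."""
    broken = []
    for url in sorted(internal_links(page_url, html)):
        status = get_status(url)
        if status == 0 or status >= 400:
            broken.append(url)
    return broken
```

You can pass a different get_status function, such as a dictionary lookup of known status codes, to test the logic without touching the network.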

Broken Backlinks, Inbound and External Links

Your links need to send the right signals to Google about your website. If your website has poor-quality or spammy inbound links, Google may treat them as an attempt to manipulate its rankings and penalize your website. Keep your link building natural. Links from authoritative, highly trusted websites usually help you rank higher, because those sites offer quality resources to readers.

Tools such as Screaming Frog SEO Spider, Ahrefs, and the Moz browser extension can support your link-building strategy. Internal linking is just as important as earning high-quality external links. Make sure your website has enough internal links directing visitors to relevant pages. For instance, link to your Contact page. When a blog post or article mentions a service you offer, link to that service’s page.

You should also check and fix broken links, because they can send a poor-quality signal to Google and reduce your presence in search results. Suppose Google sees one link to your website from a blog on a relevant topic and another from a site on an unrelated topic. It generally treats the relevant link as the more authoritative of the two. You build your “link juice” by earning links from high-authority websites.

As with internal links, you don’t want inbound links to your website to lead to an error page. Broken inbound links waste the value they would otherwise pass to your site, which can reduce how many of your pages appear in search results.

Tools such as Google Search Console and Broken Link Checker can also scan your website for broken external links. Broken backlinks are harder to fix because they live on other websites. To resolve them, contact the website the link came from and ask them to update or remove it.

Suspicious Link Building Strategies

Well-run link-building campaigns can improve your search rankings, but if you build links through manipulative schemes, Google may penalize your website.

Never use black hat link-building tactics. They can get you many links, but those links will be low quality and won’t improve your search rankings.

Other suspicious link schemes include buying or selling links and using automated programs or services to create links.

Excessive Nofollow Exit Links

The nofollow attribute is used for links to untrusted content, for paid links, and for prioritizing crawls. Beyond these purposes, don’t overuse nofollow on your external links.

Some websites add nofollow to internal links to steer crawlers toward priority pages, but Google discourages this technique.

Lowercase vs. Uppercase URLs

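SEO issues come in many sizes and forms, and some are tied to the platform your website runs on. Websites built on .NET are a common example. IIS servers typically treat URLs as case-insensitive, so the same page can be reached through both uppercase and lowercase URLs.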

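These servers usually don’t redirect mixed-case URLs to a single lowercase version, so search engines may find and index the same page under several addresses. To fix this, use URL rewrite rules (for example, with IIS’s URL Rewrite module) to 301-redirect every URL to its lowercase form.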
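On IIS, this redirect is typically configured as a URL Rewrite rule. To show the logic itself, here is a sketch written as Python WSGI middleware. It is an illustration, not a drop-in fix for a .NET site. Any request whose path contains uppercase letters gets a 301 to the lowercase path, and the query string is kept as-is. The sketch assumes ASCII paths.

```python
def lowercase_redirect(app):
    """WSGI middleware: 301-redirect any path containing uppercase letters to its lowercase form."""
    def middleware(environ, start_response):
        path = environ.get("PATH_INFO", "/")
        if path != path.lower():
            location = path.lower()
            query = environ.get("QUERY_STRING", "")
            if query:
                location += "?" + query  # keep query strings untouched
            start_response("301 Moved Permanently", [("Location", location)])
            return [b""]
        return app(environ, start_response)  # already lowercase: serve normally
    return middleware


def site(environ, start_response):
    """Stand-in for the real application (hypothetical)."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [b"page content"]


app = lowercase_redirect(site)
```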

Complicated Page URLs

When pages get automatically generated URLs, search engine friendliness is often overlooked, which hurts technical SEO performance.

You can end up with messy, obscure URLs like “index.php?p=367595”. Such URLs are neither user-friendly nor SEO-friendly, so clean them up and add relevant keywords to them.
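Most CMSs can generate clean, keyword-based slugs for you. As an illustration, here is a minimal Python slug generator. The eight-word cap is an arbitrary assumption to keep URLs short.

```python
import re
import unicodedata


def slugify(title: str, max_words: int = 8) -> str:
    """Turn a page title into a short, readable, keyword-bearing URL slug."""
    # Fold accented characters to ASCII (é -> e) and drop anything else non-ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    words = re.findall(r"[a-z0-9]+", text.lower())  # punctuation and symbols vanish
    return "-".join(words[:max_words])


print(slugify("Café Menu: Prices & Opening Hours"))  # cafe-menu-prices-opening-hours
```

After switching to readable URLs, 301-redirect each old index.php?p=367595-style address to its new slug. That way you keep your existing links and rankings.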

Query Parameters at the URL Endings

Have you seen excessively long URLs? The extra length often comes from filters (such as size and color) appended to the URL as query parameters. This is a common problem, and it hurts the performance of eCommerce websites in particular. Query parameters frequently cause duplicate content as well, because each filter combination can produce a new URL for essentially the same page.

Parameterized URLs also use up your crawl budget, so take the time to clean them up.
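One common remedy is to normalize parameterized URLs to a single clean version. You then use that version in internal links, canonical tags, and your sitemap. The sketch below works from a hypothetical list of parameters (IGNORED_PARAMS) that only filter, sort, or track, and never change the main content. Which parameters are safe to drop is specific to your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that filter, sort, or track but don't change the core content.
IGNORED_PARAMS = {"color", "size", "sort", "utm_source", "utm_medium", "utm_campaign", "sessionid"}


def clean_url(url: str) -> str:
    """Drop ignorable query parameters and sort the rest so equivalent URLs match."""
    parts = urlsplit(url)
    kept = sorted((key, value) for key, value in parse_qsl(parts.query, keep_blank_values=True)
                  if key.lower() not in IGNORED_PARAMS)
    # Rebuild without the fragment; an empty query leaves no trailing "?".
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


print(clean_url("https://shop.example.com/shirts?size=M&color=blue&page=2&utm_source=mail"))
```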

Poorly Managed Migration to a New Website or URL Structure

Website updates and migrations help keep your business fresh and relevant, but a poorly managed transition can go wrong in many ways. The biggest risk is a large loss of website traffic.

Keep a record of all your website’s URLs, make sure there are no duplicates, and confirm that every 301 redirect points to the correct destination. Whatever else you do, follow a proper migration process so you retain your traffic.
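Before launch, it helps to test the redirect map itself. This Python sketch takes two hypothetical inputs: the redirect map as a dictionary of old URL to new URL, and the set of live URLs on the new site. It flags redirect chains, loops, and redirects that land on pages that don’t exist.

```python
def check_redirect_map(redirects: dict[str, str], live_urls: set[str]) -> list[str]:
    """Flag redirect chains, loops, and targets that are not live pages.

    redirects maps each old URL to the URL it 301-redirects to;
    live_urls is the set of URLs on the new site that return 200.
    """
    problems = []
    for old, target in redirects.items():
        hops, seen = 1, {old}
        while target in redirects:  # the target itself redirects again
            if target in seen:
                problems.append(f"{old}: redirect loop via {target}")
                break
            seen.add(target)
            target = redirects[target]
            hops += 1
        else:  # no loop: target is the final destination
            if hops > 1:
                problems.append(f"{old}: chain of {hops} redirects; point it straight at {target}")
            if target not in live_urls:
                problems.append(f"{old}: final target {target} is not a live page")
    return problems


# Hypothetical migration data.
redirects = {
    "/old-a": "/new-a",
    "/old-b": "/old-a",      # chain: /old-b -> /old-a -> /new-a
    "/loop-1": "/loop-2",
    "/loop-2": "/loop-1",    # loop
    "/old-c": "/missing",    # points at a page that doesn't exist
}
for problem in check_redirect_map(redirects, live_urls={"/new-a"}):
    print(problem)
```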

Broken and Non-Optimized Images

If an image on your website is clickable, make sure it sends users somewhere when they click it. The link may lead to another page on your website or to another website.

Image links that lead nowhere can increase your website’s bounce rate and hurt your technical SEO. Even after you’ve linked your images, check them regularly to make sure site errors or domain changes haven’t broken the links.

Broken images are a common issue, usually caused by website or domain changes or by files being moved or renamed after publishing. If you find broken images on your website, fix them immediately.

Google’s crawlers rely largely on text to understand what the images on your website show. Make sure each image’s file name, title attribute, and ALT text describe it. Add a brief description and vary the wording. If you use the same images across your website, get a little creative so each instance’s text isn’t identical.

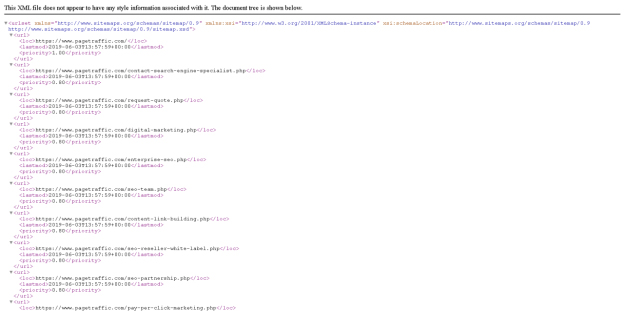

Broken, Missing, or Outdated Sitemap

Sitemaps are a crucial element because you submit them to Google Search Console and Bing Webmaster Tools, where they help the search engines index your website. Creating a sitemap is easy, and many web design and hosting platforms can generate one for you. Even so, sitemaps are an area where technical SEO efforts often fall short.

Crawlers use a sitemap, much like your internal links, to work out which pages to visit next. When your sitemap is broken, missing, or outdated, crawlers can’t tell where to go next.

If crawlers can’t find your content, they can’t index it, and pages that aren’t indexed won’t rank on SERPs.

Keep your sitemap up to date with every change you make to your pages. If you find it hard to create a sitemap yourself, use a plugin such as Yoast.

An XML sitemap helps Google’s bots understand your pages in detail so they can crawl your website effectively. To check whether you have one, type your domain name followed by “/sitemap.xml” into your browser’s address bar. That is where the sitemap usually lives. If your website has a sitemap, you will see an XML file listing your site’s URLs.

If you land on a 404 page instead, you need to create a sitemap. If you can’t build one yourself, hire a web developer, although the simplest option is an XML sitemap generator. If you have a WordPress website, the Yoast SEO plugin can generate XML sitemaps automatically.
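If you would rather generate the file yourself, an XML sitemap is simple: a urlset element in the sitemaps.org namespace, with one url entry per page. Here is a minimal Python sketch using the standard library’s ElementTree, with a hypothetical page list. Large sites would need to split their URLs across several sitemap files, because the protocol caps each file at 50,000 URLs.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, str]]) -> bytes:
    """Build a sitemap.xml document from (absolute URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes characters such as &
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-05-01
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical pages.
pages = [
    ("https://www.example.com/", "2024-05-01"),
    ("https://www.example.com/services", "2024-04-18"),
]
print(build_sitemap(pages).decode("utf-8"))
```

Write the returned bytes to sitemap.xml in your site’s root, then submit the sitemap in Google Search Console.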

Rel=canonical Usage in the Wrong Place

The rel=canonical tag tells search engines that a page is a copy or variant of another page. Used correctly, it consolidates duplicate content without hurting your rankings, and it can improve your credibility. It signals that the URL named in the canonical tag is the preferred version, the one that should be indexed.

But misplaced rel=canonical tags can confuse search engines so badly that important pages may not be ranked at all. Check the code on all your pages to find the rel=canonical tags and make sure each one points to the right URL.
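Checking canonical tags by hand gets tedious on large sites. As a sketch of what to look for, the Python code below flags three problems: a page with no canonical tag, a page with more than one, and a tag that points outside a set of pages you know are indexable. The indexable_urls input is a hypothetical list you would build from your own crawl.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Records the href of every <link rel="canonical"> on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rels and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def check_canonical(page_url: str, html: str, indexable_urls: set[str]) -> list[str]:
    """Flag missing, multiple, or misdirected canonical tags on one page."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return ["no canonical tag"]
    if len(parser.canonicals) > 1:
        return [f"{len(parser.canonicals)} canonical tags; search engines may ignore them all"]
    target = urljoin(page_url, parser.canonicals[0])  # resolve relative hrefs
    if target not in indexable_urls:
        return [f"canonical points to {target}, which is not an indexable page"]
    return []


# Hypothetical: a filtered product page that should point at the main listing.
print(check_canonical("https://example.com/shirts?color=blue",
                      '<link rel="canonical" href="/shirts">',
                      {"https://example.com/shirts"}))
```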

Title Tag Complications

Title tags may have a variety of complications that adversely affect SEO, such as duplicate tags, missing title tags, and too short/too long title tags.

Your page title (title tag) helps both search engines and users understand what a page is about, which makes it a crucial part of SEO. Getting technical SEO right starts with basics like this.

Remember, your title tag should contain 50 to 60 characters and include at least one or two of your target keywords. To prevent technical complications, make sure each page’s title is unique and your website has no duplicated content.

Beyond the technical aspects, titles tend to earn more clicks when they use numbers, dates, capitalization, and emotional language.
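To check these rules across a batch of pages, a short script helps. This Python sketch extracts each page’s title with the standard html.parser. It then flags missing titles, titles outside the 50 to 60 character range described above, and titles shared by more than one page. It only counts characters; it doesn’t check keywords or emotional appeal.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Captures the text of the first <title> element on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.title = None  # becomes a string once a <title> tag is seen
        self.in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self.title = ""
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def audit_titles(pages: dict[str, str], min_len: int = 50,
                 max_len: int = 60) -> dict[str, list[str]]:
    """Map each URL to its title-tag problems (an empty list means none found)."""
    report: dict[str, list[str]] = {}
    first_seen: dict[str, str] = {}  # title text -> first URL that used it
    for url, html in pages.items():
        parser = TitleParser()
        parser.feed(html)
        title = " ".join((parser.title or "").split())  # normalize whitespace
        issues = []
        if not title:
            issues.append("missing title")
        else:
            if not min_len <= len(title) <= max_len:
                issues.append(f"{len(title)} characters (aim for {min_len}-{max_len})")
            if title in first_seen:
                issues.append(f"same title as {first_seen[title]}")
            else:
                first_seen[title] = url
        report[url] = issues
    return report
```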

Missing or Duplicated H1 Tag

Header (H1) tags are a crucial element of on-page SEO. Title tags and H1 tags serve different purposes: the title tag is displayed in search results, while the H1 is the headline visitors see on the page itself. Write each one with its own purpose in mind.

Make sure you don’t have more than one H1 tag on a page. H1 tags are often missing or simply copied from the title tag. Never leave out the H1 or copy it; give every page its own unique H1.

To perform well in technical SEO, your H1 tag should include the keyword you target on the web page. It should be 20 to 70 characters long and precisely reflect the web page content.
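The H1 rules above are easy to check automatically. The following Python sketch assumes you know each page’s target keyword and, optionally, its title tag. It reports a missing H1, multiple H1s, an H1 outside 20 to 70 characters, a missing keyword, and an H1 copied verbatim from the title.

```python
from html.parser import HTMLParser


class H1Parser(HTMLParser):
    """Collects the text of every <h1> element, including text in nested inline tags."""

    def __init__(self) -> None:
        super().__init__()
        self.h1s: list[str] = []
        self.in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self.in_h1 = True
            self.h1s.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self.in_h1 = False

    def handle_data(self, data):
        if self.in_h1:
            self.h1s[-1] += data


def check_h1(html: str, keyword: str, title: str = "") -> list[str]:
    """Return H1 problems for one page; keyword and title are supplied by you."""
    parser = H1Parser()
    parser.feed(html)
    headings = [" ".join(h.split()) for h in parser.h1s]  # normalize whitespace
    if not headings:
        return ["missing H1"]
    issues = []
    if len(headings) > 1:
        issues.append(f"{len(headings)} H1 tags; use exactly one")
    h1 = headings[0]
    if not 20 <= len(h1) <= 70:
        issues.append(f"H1 is {len(h1)} characters (aim for 20-70)")
    if keyword.lower() not in h1.lower():
        issues.append(f"H1 does not contain '{keyword}'")
    if title and h1 == title.strip():
        issues.append("H1 is copied from the title tag")
    return issues
```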

Meta Refresh Utilization

Meta refresh is an outdated technique for redirecting users to another page; most web developers now use 301 redirects instead. Google doesn’t recommend meta refresh, and it won’t deliver the same benefits as a 301.

According to Moz, meta refresh tends to be slower and is not a recommended SEO technique. It is most commonly associated with a five-second countdown and text such as “If you are not redirected in 5 seconds, click here.”

Meta refreshes do pass some link juice. They are still not recommended, because they work poorly for users and can pass less link juice than a 301.

Extra-long Web Pages

Unnecessarily long pages slow down your website, which can significantly harm your SEO. A common cause is content meant for a single page, such as location details or terms and conditions, that ends up embedded in every page.

Use a tool, like Screaming Frog, to scan your website. Check if you have the expected word count and no hidden text.

Wrong Language Declaration

As a business owner, you want your content to reach the right customers, including those who speak and understand your language. Yet many brands overlook the language declaration as part of technical SEO.

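Declare your default language so that browsers and search engines can identify the language of your pages and offer translation when needed. If you also specify a country, it signals which location you are targeting. Remember, location can affect both your technical SEO and your international SEO.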

Check the language and country code in your website’s source code to confirm that the declaration is correct. The code usually lives in the lang attribute on the html element, such as lang="en-US".
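As a sketch of that check, this Python code reads the lang attribute of a page’s html element and compares it with the language you expect. The regular expression is a simplified approximation of valid BCP 47 language tags, not a full validator.

```python
import re
from html.parser import HTMLParser


class LangParser(HTMLParser):
    """Reads the lang attribute of the first <html> element."""

    def __init__(self) -> None:
        super().__init__()
        self.lang = None

    def handle_starttag(self, tag, attrs):
        if tag == "html" and self.lang is None:
            self.lang = dict(attrs).get("lang")


# Simplified BCP 47 shape: 2-3 letter language, optionally "-" plus a 2-letter
# country or 3-digit region (e.g. "en", "en-US", "es-419").
LANG_PATTERN = re.compile(r"^[a-zA-Z]{2,3}(-([a-zA-Z]{2}|[0-9]{3}))?$")


def check_lang(html: str, expected: str) -> list[str]:
    """Compare the page's declared language with the one you expect."""
    parser = LangParser()
    parser.feed(html)
    if not parser.lang:
        return ["no lang attribute on <html>"]
    if not LANG_PATTERN.match(parser.lang):
        return [f"'{parser.lang}' does not look like a language code such as 'en' or 'en-US'"]
    if parser.lang.lower() != expected.lower():
        return [f"declared '{parser.lang}', expected '{expected}'"]
    return []
```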

No ALT-Text Tags

ALT text benefits your technical SEO in two ways. First, it makes your website more accessible. Visually impaired visitors using screen readers hear the ALT text, which tells them what each image shows and why it’s there.

Second, ALT text gives you more text content that can help your website rank on SERPs. So use proper, descriptive ALT text if you want to improve your technical SEO performance.
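A quick script can list the images that need attention. This Python sketch flags images with no ALT text, plus images that reuse another image’s ALT text word for word (the copied-text problem mentioned earlier). Purely decorative images are supposed to have an empty alt attribute. Treat “missing ALT text” as a prompt to review, not an automatic error.

```python
from html.parser import HTMLParser


class ImageAltParser(HTMLParser):
    """Records (src, alt) for every <img>; alt is None when the attribute is absent."""

    def __init__(self) -> None:
        super().__init__()
        self.images = []  # list of (src, alt) pairs

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            self.images.append((attributes.get("src", ""), attributes.get("alt")))


def audit_alt_text(html: str) -> list[str]:
    """Flag images with no ALT text and ALT text repeated across images (case-insensitive)."""
    parser = ImageAltParser()
    parser.feed(html)
    issues, seen = [], {}
    for src, alt in parser.images:
        text = (alt or "").strip()
        if not text:
            issues.append(f"{src}: missing ALT text")
        elif text.lower() in seen:
            issues.append(f"{src}: same ALT text as {seen[text.lower()]}")
        else:
            seen[text.lower()] = src
    return issues
```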

Improper Use of 301 and 302 Redirects

Learn the difference between 301 and 302 redirects so you can use each one when it’s needed.

A 301 redirect is permanent, so use it when you are permanently moving or replacing a page. It tells search engines that they can stop indexing and crawling the old URL and index the new location instead.

A 302 redirect is temporary. It tells search engines that the page is going through changes but will be back online soon, so they should keep indexing and crawling the original URL.

When you permanently move or replace a page, use a 301 rather than a 302, so search engines don’t keep indexing and crawling a page you no longer use.
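In practice, you set redirects in your web server, CMS, or hosting panel. To make the difference concrete, here is a Python WSGI sketch with a hypothetical redirect table. A page that has moved for good answers with 301, and a temporary detour answers with 302. Only the status line differs between the two.

```python
# Hypothetical redirect table: path -> (status line, destination).
REDIRECTS = {
    "/old-services": ("301 Moved Permanently", "/services"),  # moved for good
    "/summer-sale": ("302 Found", "/sale-coming-soon"),        # temporary detour
}


def redirect_app(environ, start_response):
    """WSGI app that answers known paths with their configured redirect."""
    path = environ.get("PATH_INFO", "/")
    if path in REDIRECTS:
        status, location = REDIRECTS[path]
        start_response(status, [("Location", location)])
        return [b""]
    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]
```

To try it locally, you can serve redirect_app with the standard library’s wsgiref.simple_server.make_server and inspect the responses with a tool such as curl -I.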

No Use of Custom 404 Pages

Sooner or later, someone will link to your website with an invalid URL. It happens on most websites and, if handled badly, can cause serious SEO issues.

When visitors arrive through a broken URL, don’t show them a generic 404 error message on a blank white page. Show them a clear, easy-to-understand custom 404 page instead.

Even though the requested page doesn’t exist, your 404 page can still use your site’s design and color scheme. You can also add a link to your homepage so users can look for the article or page they were expecting.

Soft 404 Errors

When a search engine receives a 404 status code, it knows the page doesn’t exist and stops crawling and indexing that page.

With a soft 404, however, your website shows a “not found” message but returns a 200 (OK) status code. That code tells search engines the page is working as it should, so they keep crawling and indexing it.
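The fix for both problems is the same: show a friendly, on-brand error page, but send it with a real 404 status code. Here is a minimal Python WSGI sketch. The page content, stylesheet path, and /search endpoint are hypothetical placeholders.

```python
from html import escape

PAGES = {"/": "<h1>Home</h1>", "/services": "<h1>Services</h1>"}  # hypothetical site content

NOT_FOUND_TEMPLATE = """<!doctype html>
<html lang="en">
<head><title>Page not found</title><link rel="stylesheet" href="/site.css"></head>
<body>
  <h1>Sorry, we couldn't find {path}</h1>
  <p>Try the <a href="/">homepage</a> or search below.</p>
  <form action="/search"><input name="q" aria-label="Search"></form>
</body>
</html>"""


def app(environ, start_response):
    path = environ.get("PATH_INFO", "/")
    if path in PAGES:
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [PAGES[path].encode("utf-8")]
    # Branded, helpful page, but with a genuine 404 status so it isn't a soft 404.
    body = NOT_FOUND_TEMPLATE.format(path=escape(path))  # escape() guards against HTML injection
    start_response("404 Not Found", [("Content-Type", "text/html; charset=utf-8")])
    return [body.encode("utf-8")]
```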

Server Header With a Wrong Code

Make sure to check your server headers when conducting your technical SEO audit. Many online tools work as server header checkers and tell you which HTTP status code your pages return.

Pages that return a 4xx or 5xx status code are flagged as problematic, and search engines avoid indexing them. If your server header returns a problem code, fix it in your website’s backend so the URL returns a healthy status code, such as 200 OK (or a 301 where you intend a redirect).

Low Text-to-HTML Ratio

Search engines prefer content that loads quickly and is easy to process. Visible copy and text make it simpler for them to understand what your website is about and which keywords the content relates to.

If your pages are cluttered and convoluted, search engines may not make the effort to figure them out, and they may not index pages that confuse them. A lot of backend code also makes your website load slowly. Make sure your visible text outweighs your HTML code.

Excessive coding tends to be quite a big problem for technical SEO. However, the problem has easy solutions like removing extra code or adding more on-page text content. You can also block or delete any unnecessary or old pages.
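For a rough number, compare the length of a page’s visible text with the length of its full HTML. The Python sketch below does that with the standard html.parser, ignoring the contents of script and style tags. Google doesn’t publish a target ratio, so treat the result as a relative indicator for comparing your own pages.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Accumulates text a visitor would see, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.skip = 0  # > 0 while inside script/style
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip:
            self.skip -= 1

    def handle_data(self, data):
        if not self.skip:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Approximate share of the page's characters that are visible text (0.0 to 1.0)."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())  # collapse whitespace
    return len(text) / len(html) if html else 0.0
```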

Non-indexed Web Pages

Properly indexed pages save you a lot of trouble. Before you start evaluating possible SEO issues, check how your website currently ranks. All you need is a simple Google search.

Type your website URL into Google’s search box to find your top-ranking pages. If they appear near the top of the SERP, that’s good for your SEO.

If a page you want to rank is missing from the SERPs, you need to dig deeper. To check which pages are indexed, type “site:yoursitename.com” into Google’s search bar. The results show an approximate count of your website’s indexed pages.

If your website isn’t indexed at all, start by submitting your URL to Google. If it is indexed but you get many more or far fewer results than expected, dig deeper. Look for old versions of your site or hacked spam pages that are indexed because no proper redirects point to your updated site. Then audit the indexed content and compare it with the pages you want to rank.

If you still can’t work out why your content isn’t ranking, review Google’s Webmaster Guidelines (now called Google Search Essentials) to make sure your website complies. If the results differ from what you expected in any way, check that robots.txt isn’t blocking your important pages. Also make sure you haven’t added a NOINDEX meta tag by mistake.

Inappropriate Noindex Code

A misplaced noindex directive is a small piece of code that can severely damage your technical SEO: it tells search engines not to index the page. This mistake usually happens during development, before the website goes live, so remove any noindex code that doesn’t belong before launch.

If you don’t remove it in time, search engines won’t index those pages, and pages that aren’t indexed can’t rank on SERPs.
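A noindex directive can come from a robots meta tag in the HTML or from an X-Robots-Tag HTTP response header, so check both. Here is a minimal Python sketch that does that for one page, given its HTML and response headers; fetching them is left out. It treats “none” as noindex, because that value means noindex, nofollow. A user-agent-specific header such as “googlebot: noindex” is counted as noindex regardless of which crawler it targets.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        name = (attributes.get("name") or "").lower()
        if tag == "meta" and name in ("robots", "googlebot"):
            content = attributes.get("content") or ""
            self.directives.update(d.strip().lower() for d in content.split(","))


def is_noindexed(html: str, headers: dict[str, str]) -> bool:
    """True if the page's HTML or its X-Robots-Tag header says noindex (or none)."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_directives = set()
    for key, value in headers.items():
        if key.lower() == "x-robots-tag":
            for part in value.split(","):
                # Drop an optional user-agent prefix such as "googlebot: noindex".
                header_directives.add(part.split(":")[-1].strip().lower())
    return bool({"noindex", "none"} & (parser.directives | header_directives))
```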

Robots.txt File Error

A robots.txt file error can severely affect your technical SEO, so pay close attention to it as you work through your technical SEO checklist. Something as seemingly unimportant as a misplaced character in your robots.txt file can do serious damage and cause pages to be indexed incorrectly.

Be careful, or make sure your web developer is careful, when writing and organizing the file. You can list all the right directives, but if they don’t work together as intended, crawlers may reach URLs you never meant them to crawl.

Look especially for a misplaced “disallow” rule. It tells Google and other search engines not to crawl the matching pages, which can keep them from being indexed correctly. To test your robots.txt file, use the robots.txt testing tools in Google Search Console.

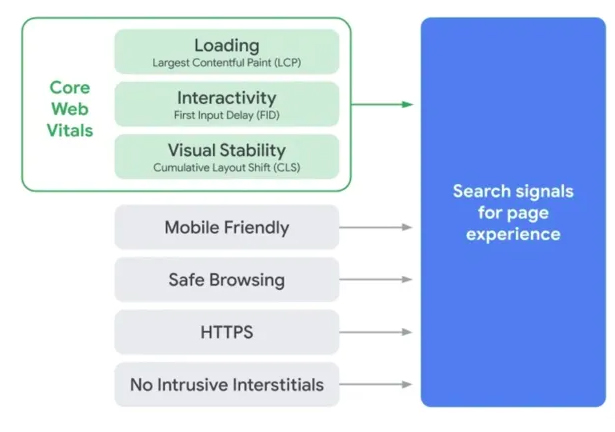
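Before deploying a robots.txt change, you can also test it against the URLs you care about. This sketch uses Python’s built-in urllib.robotparser; the rules and URL lists are hypothetical. One caveat: Python’s robotparser (at least in current releases) applies the first matching rule in file order. Google applies the most specific (longest) matching rule, so when Allow and Disallow rules overlap, confirm the result in Google Search Console.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt rules you are about to deploy (hypothetical content).
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /checkout
Allow: /
"""

# Pages that must stay crawlable, and pages that must stay blocked (hypothetical).
MUST_ALLOW = ["https://www.example.com/", "https://www.example.com/services/seo"]
MUST_BLOCK = ["https://www.example.com/admin/login", "https://www.example.com/checkout?step=2"]


def check_robots(robots_txt: str, agent: str = "Googlebot") -> list[str]:
    """Report URLs whose crawlability differs from what you intended."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = [f"blocked but should be crawlable: {u}"
                for u in MUST_ALLOW if not parser.can_fetch(agent, u)]
    problems += [f"crawlable but should be blocked: {u}"
                 for u in MUST_BLOCK if parser.can_fetch(agent, u)]
    return problems


print(check_robots(ROBOTS_TXT))  # an empty list means every URL behaves as intended
```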

Poor Core Web Vitals

Core Web Vitals are a relatively new factor in technical SEO, but you shouldn’t overlook them. They are a set of Google metrics that show how your website performs for real users (see the image below). They measure whether your pages load fast, respond quickly, and stay visually stable. Alongside signals such as HTTPS, they indicate whether your site is safe and user-friendly.

Aim for your pages to pass Google’s Core Web Vitals assessment, because good scores help your website rank higher on SERPs. If Google can’t trust your website to deliver a good experience, it is less likely to recommend it to users.

Slow Loading Website

A slow website tends not to rank high on SERPs. Slow loading sends a poor-quality signal and causes significant SEO issues, leaving you behind your competitors.

Google has said that it and its users value site speed highly. When a page loads slowly, visitors quickly leave, which increases the bounce rate. That is why Google uses site speed as a ranking factor. It draws on a variety of sources to assess how fast a website is compared with others.

Slow loading can hurt your technical SEO. Fortunately, you can monitor your site speed and identify and fix issues quickly.

To show what load time you should aim for, SEMRush summarized research findings as follows:

- If your website loads in 5 seconds, it is quicker than around 25% of the websites.

- If your website loads in 2.9 seconds, it is quicker than around 50% of the websites.

- If your website loads in 1.7 seconds, it is quicker than around 75% of the websites.

- If your website loads in 0.8 seconds, it is quicker than around 94% of the websites.

Here are some tips to boost your website speed:

Enable Compression

Enabling compression (such as gzip) shrinks the files your server sends and improves the speed of your website. Because it usually involves updating your web server configuration, ask your web development team to set it up rather than trying to do it on your own.
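Your developers will enable compression in the server configuration itself (in nginx, for instance, with the gzip directive). To see why it’s worth doing, this Python snippet gzips a hypothetical, repetitive chunk of HTML and compares the sizes. Markup compresses well because it repeats the same tags and class names.

```python
import gzip

# Hypothetical page body: repetitive markup, as real product listings tend to be.
html = ("<div class='product-card'><h2>Blue shirt</h2><p>In stock</p></div>\n" * 200).encode("utf-8")

compressed = gzip.compress(html)
print(f"original: {len(html)} bytes, gzip: {len(compressed)} bytes "
      f"({len(compressed) / len(html):.1%} of the original)")
```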

Optimize Images

Many websites use images of 500 KB or more. You can often make these images much smaller without compromising their quality. The fewer bytes your website has to load, the faster it serves the page.

Use Browser Caching

If you use WordPress, a caching plugin can enable browser caching for you. Returning visitors then load resources (like images) from their local cache rather than across the network, so your website feels faster when they revisit it.

Use a CDN

A content delivery network (CDN) delivers content quickly to visitors by serving your website from a node near their location. The main disadvantage is cost: CDNs can be quite expensive. However, if user experience matters to you, a CDN is a worthwhile investment.

Poor Mobile Experience of Your Website

As almost everyone uses a smartphone these days, your website needs to be optimized for mobile devices to increase customer outreach.

In 2016, Google declared its motive to initiate mobile-first indexing by saying, “To make our results more useful, we’ve begun experiments to make our index mobile-first. Although our search index will continue to be a single index of websites and apps, our algorithms will eventually primarily use the mobile version of a site’s content to rank pages from that site, to understand structured data, and to show snippets from those pages in our results.”

Mobile browsing grows more popular every day. If you haven’t optimized your website for smartphones and other mobile devices, do it right away. When people can access all your content on handheld devices, your customer outreach grows automatically.

To optimize your website for mobile devices, consider its design, structure, use of Flash (which mobile browsers don’t support), page speed, and other crucial elements.

Poor Website Navigation

When users can’t navigate your website easily, they tend not to engage, which makes the site less useful to them. Poor navigation can also lead search engines to treat your website as less authoritative, which can significantly hurt your rankings.

No HTTPS Security

Web security is on everyone’s mind these days, and most websites now use HTTPS, so securing your own site with HTTPS matters more than ever. Google Chrome first flagged non-HTTPS pages that ask for passwords or credit card details. It now marks all HTTP pages as “Not secure”.

If your website isn’t secure, Chrome shows a gray “Not secure” label in the address bar when visitors open it. On pages where users enter data, the warning turns red (see the image below), which is even worse. Warnings like these can make users leave your website immediately and go back to the SERP.

To check your site, type your domain name into Google Chrome. If the address starts with https:// and no “Not secure” warning appears, your website is served over HTTPS. To convert your website to HTTPS, you need an SSL/TLS certificate from a Certificate Authority. Once you install the certificate and redirect your HTTP URLs to HTTPS, your website is secure.

Search engines don’t want to damage their credibility by sending users to insecure websites. That is why Google uses HTTPS as a ranking signal, and an unsecured website starts at a disadvantage. When it comes to improving your local SEO, staying on HTTP can be a big mistake.

No Use of Local Searches and Structured Data Markup

Local searches account for a large share of search queries. So build a presence on local data providers (such as Facebook and Yelp) and on your Google Business Profile (formerly Google My Business). Make sure your contact details are consistent everywhere they appear.

Structured data is an easy way to help Google’s crawlers understand your website content. For instance, if a page contains a recipe, its ingredient list is ideal content to mark up as structured data. Google can then display that data on SERPs as rich snippets, which make your listing more visually appealing.

Before creating and posting new content, look for opportunities to add structured data to the web page, and coordinate the process between your content developers and your SEO experts. Well-implemented structured data can boost your click-through rate (CTR), which supports your search performance. After you implement structured data, review the relevant reports in Google Search Console regularly to make sure Google is not reporting any problems with your structured data markup.

You can use Schema Builder to create, test, and implement structured data with a user-friendly point-and-click interface.

Multiple Versions of the Home Page

Duplicate content makes it harder to perform well in technical SEO, and the problem gets worse if your homepage is duplicated. Make sure that your homepage doesn’t have multiple versions (such as “www”, “non-www”, and “/index.html” versions).

You can easily check whether your home page has multiple versions by searching for your website on Google, for example with a “site:example.com” search using your own domain. The results show which versions of the page are indexed.

When you discover multiple versions, add a 301 (permanent) redirect from each duplicate version to the preferred home page, so that search engines and users end up at the right URL.
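Redirects are normally set up in your server or CMS configuration, but the rule itself is simple. This hypothetical Python helper shows the decision logic, assuming your preferred home page is `https://www.example.com/` (set `USE_WWW = False` if you prefer the non-www version): every scheme, host, and index-file variant of the home page maps to that single URL, which you would then serve with a 301 status.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Hypothetical site preferences: HTTPS plus the www host name.
PREFERRED_SCHEME = "https"
USE_WWW = True
INDEX_FILES = ("index.html", "index.htm", "index.php")


def redirect_target(url: str) -> Optional[str]:
    """Return where a home-page variant should 301 to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # lower-cased, without any port
    bare = host[4:] if host.startswith("www.") else host
    preferred_host = f"www.{bare}" if USE_WWW else bare

    path = parts.path or "/"
    if path.lstrip("/") in INDEX_FILES:
        path = "/"  # "/index.html" is the home page too
    if path != "/":
        return None  # not a home-page URL; leave it alone

    canonical = urlunsplit((PREFERRED_SCHEME, preferred_host, "/", parts.query, ""))
    return None if canonical == url else canonical


for variant in ("http://example.com/", "https://example.com/index.html",
                "https://www.example.com/"):
    print(variant, "->", redirect_target(variant))
```

Keeping the rule in one place makes it easy to confirm that all variants point to the same home page, rather than chaining several redirects.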

No Use of Breadcrumb Menus

If you run an eCommerce website with multiple categories and subcategories, adding breadcrumb links to your web pages can be a smart decision. You have probably seen breadcrumbs while browsing the web.

Breadcrumbs look like this:

Categories > Electronics > Mobile Devices > Smartphones

Because each of these words or phrases is a link, search bots can follow them to crawl your category pages. Breadcrumbs also simplify navigation for visitors: they can move up one or two levels and browse your website by a different route.
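Besides the visible links, you can describe the trail to search engines with schema.org `BreadcrumbList` structured data. A minimal Python sketch for the example trail follows; the function name and the URLs are hypothetical placeholders.

```python
from __future__ import annotations

import json


def breadcrumb_jsonld(trail: list[tuple[str, str]]) -> dict:
    """Turn (name, url) pairs, top level first, into a schema.org BreadcrumbList."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)  # positions start at 1
        ],
    }


# Hypothetical URLs for the trail "Categories > Electronics > Mobile Devices > Smartphones"
trail = [
    ("Categories", "https://www.example.com/categories/"),
    ("Electronics", "https://www.example.com/categories/electronics/"),
    ("Mobile Devices", "https://www.example.com/categories/electronics/mobile/"),
    ("Smartphones", "https://www.example.com/categories/electronics/mobile/smartphones/"),
]
print(json.dumps(breadcrumb_jsonld(trail), indent=2))
```

The JSON would be embedded in a `<script type="application/ld+json">` tag on the Smartphones page, next to the visible breadcrumb links.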

Low Word Count

Brevity and simplicity hold high value in digital marketing, but too little text can significantly harm your SEO. Google tends to rank in-depth content higher on SERPs, and longer pages are often better able to cover a topic in depth.

So, try to write and add more long-form articles (ranging from 1,500 to 4,000 words) across your website for better search engine results.

Google Penalty

No business wants to be penalized by Google. Google has said that it uses more than 200 signals to rank websites, and penalties can override those rankings. If Google has penalized your website, you need to take action before it can rank normally on the search engine result pages again.

First, learn as much as you can about why the penalty happened and how to fix it; the Manual Actions report in Google Search Console is a good place to start. If the problem is unnatural links, you can use Google’s disavow tool to tell Google which links it should no longer associate with your website.
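The disavow tool takes a plain-text file with one entry per line: a full URL to ignore links from a single page, `domain:` followed by a host name to ignore a whole domain, and `#` for comments. A small Python sketch that builds such a file from lists you have already reviewed follows. The function name, domains, and URL are hypothetical, and because disavowing the wrong links can hurt your rankings, Google advises using the tool with caution.

```python
from __future__ import annotations


def build_disavow_file(domains: list[str], urls: list[str]) -> str:
    """Build the text of a disavow file: one entry per line, '#' for comments."""
    lines = ["# Links we asked the site owners to remove, without success"]
    for domain in sorted({d.strip().lower() for d in domains}):
        lines.append(f"domain:{domain}")  # ignore every link from this domain
    for url in sorted({u.strip() for u in urls}):
        lines.append(url)                 # ignore links from this single page
    return "\n".join(lines) + "\n"


# Hypothetical spammy sources found during a link audit
text = build_disavow_file(
    domains=["spammy-links.example", "Spammy-Links.example"],
    urls=["https://cheap-seo.example/page1.html"],
)
print(text)
```

Duplicates are removed and entries sorted, so the file stays easy to review before you upload it.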

Your website may fail to rank consistently on Google SERPs for several reasons. Identifying them won’t always tell you immediately how to fix them, but with a little research and effort, you can get on the right path.

Missing or Inconsistent Citations in Local Search Results

Citations (online mentions of your business name, address, and phone number) are crucial for building the credibility of your local listings. Google’s algorithm updates can affect citations on numerous websites, including those optimized by brands that sell citation services or even by SEO agencies themselves.

So, why does this happen? Often, these companies create listings that don’t link back to your website or that carry inconsistent information about your business. Google may penalize such listings because it trusts them less than properly optimized ones. If your website isn’t ranking in Google because you are not cited correctly, work on making your citations accurate and consistent to improve your search engine rank.

New Google Algorithm Updates

Have you ever checked websites like Moz that track Google’s algorithm updates? If so, you will have noticed that Google releases at least one major update every year.

So, how does a new Google algorithm update affect your technical SEO? Updates often target spam, such as spammy links and backlinks and spammy social media profiles. If your website is spammy in any shape or form, the next big Google update may affect it significantly.

The Bottom Line

If your website consistently fails to rank high on SERPs, it’s high time to work on your technical SEO. You may have the best website, but you can’t afford to fall behind your competitors because of poor SEO performance.

Technical SEO issues are not always easy to spot. Hopefully, this post helps you learn the most common technical issues and how to fix them.

Once you notice any of the issues mentioned above on your website, solve them as soon as possible. Get in touch with a leading SEO service provider who can help you fix these issues.

Frequently Asked Questions

What are the common technical SEO issues?

Here are some issues you should look out for:

● Broken links

● Duplicate content

● Slow page load speed

● Poor mobile experience

● Poor site navigation

● Missing robots.txt file

● Canonical issues
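If a robots.txt file exists, it is worth confirming that it does not block pages you want indexed. Python’s standard `urllib.robotparser` module can run that check offline; the rules and URLs below are hypothetical examples. Note that this parser applies the first matching rule, while Google uses the most specific one, so results can differ when `Allow` and `Disallow` rules overlap.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents; in practice, use your site's /robots.txt
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Pages you expect search engines to crawl (hypothetical URLs)
important = [
    "https://www.example.com/",
    "https://www.example.com/categories/electronics/",
    "https://www.example.com/checkout/",
]
for url in important:
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{'OK     ' if allowed else 'BLOCKED'} {url}")
```

Any important page reported as BLOCKED points to a rule that needs changing before search engines can crawl it.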

What is a technical SEO checklist?

Technical SEO is integral to any website’s SEO. A technical SEO checklist covers all the important technical parameters against which a website should be analyzed and corrected.

How are on-page, off-page and technical SEO different?

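Technical SEO covers work on a website’s infrastructure rather than its content, such as crawling, indexing, and page speed. On-page SEO refers to optimizing the content and elements of the pages themselves, while off-page SEO covers tasks accomplished outside the website, such as link building.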