Unique content matters online. Yet duplicate content is a common problem when creating content: if the same or very similar content appears elsewhere on your website or on another site, it can hurt your search engine rankings and make it harder for people to find you.

It is important to find and fix duplicate content so that search engine crawlers can index your website efficiently and people get new and useful information. Managing duplicate content well takes a plan that combines technical knowledge with content auditing tools.

Creating new and unique content not only helps prevent duplicate content but also makes your brand known for sharing new and interesting ideas. In this post, we’ll explore how to fix duplicate content issues, so you can improve your online strategy and make your brand stand out.

What is Duplicate Content?

Duplicate content means having the same information in different places, either on the same website or on different websites on the internet. This repeating information can happen on purpose or by accident and creates problems for people who own websites.

Search engines want to give people the best and most varied content when they search for something. When they find the same content in more than one place, they might have a hard time deciding which version to show first in search results.

This could make the affected pages rank lower in search results. Where the copying is deliberate and deceptive, it can even lead to penalties that make the website less visible. Duplicate content comes in two main forms:

- Internal Duplication: It happens when a website has lots of URLs that lead to the same or very similar content.

- Cross-Domain Duplication: It happens when the exact same content is on different websites. This can be because the content is shared, copied, or distributed to other sites.


How Does Duplicate Content Affect SEO?

Lost Rankings

Duplicate content is bad for your website’s visibility on search engines because it can make your site rank lower and be harder to find in search results. When there are many pages on a website or on different websites with the same content, search engines may have trouble deciding which page is the most important to show in search results.

This can cause keyword dilution, where none of the pages rank well for specific keywords because the authority is spread out. Also, when there are multiple pages with the same content, they can compete with each other and make it harder for any of them to rank well in search results.

Search engines can punish websites that copy content on purpose. This can cause the website to lose its ranking or be removed from search results. Also, having the same content in different places can use up the time and resources of search engines, which stops them from properly indexing other important pages on the website.

By dealing with duplicate content issues ahead of time, websites can keep or improve their search engine rankings, make the website better for users, and make their overall online presence stronger.

Wasted Crawl Budget

Crawl budget is the amount of time and resources search engines spend crawling and indexing the pages of a website. If the same content appears at many URLs, search engines have to spend part of that budget fetching it again and again.

This wasteful use of crawl budget means that search engines may not spend enough time and resources to find and add new or updated content quickly. This could delay when people can see it in search results.

Furthermore, having the same content on multiple pages takes crawl time away from the important pages on your site. This can make it harder for important pages to show up in search results and for new content to be recognized quickly.

This delay can stop a website from ranking better for specific keywords and attracting organic visitors. If a website is not easy to crawl, it may not show up well in search engine results, because search engines may spend their time on less important repeated content instead of important and unique pages.

Dilutes Link Equity

Links are like a vote of confidence from one page to another, and having the same content in different places can make those votes less impactful. If the same content appears at several different URLs, the links pointing to those copies are split between them.

This splitting of link power can make each copy page less important to search engines.

Search engines try to put web pages in order based on what they think is important and related, which is influenced by how many good links go to that page.

When there are many copies of the same content, the backlinks that could make one page very important are not as strong because they are shared among the duplicates. So, when there are multiple pages that are the same, none of them will have as many links to help them rank high in search results as one single page would.

Having diluted link equity can hurt how well a website shows up in search engines. Pages that are not seen as important by search engines may not show up high in search results for specific words. This can affect how many people visit a website through search results because pages that are ranked lower don’t get as many clicks and visits.

Tools to Find Duplicate Content

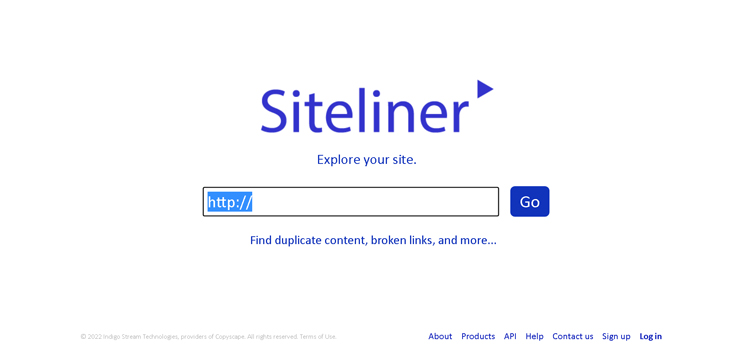

Siteliner

Siteliner is a helpful tool that finds duplicate content within a website. It works by crawling the site and comparing each page against the others to find content that is repeated from one page to the next.

Siteliner gives detailed reports showing how much of the same content is found and points out which pages are affected. Once the same content is found, it helps you compare it and see how similar it is. This can help website managers figure out how much of the content is duplicated.

This helps to understand how big the issue is and gives ideas for fixing it. Suggestions frequently involve using canonical tags to indicate preferred URLs, creating 301 redirects to combine duplicate pages, or adjusting content to make sure it is unique.

By using Siteliner to check websites often, website managers can keep track of duplicate content, follow good SEO practices, and improve the overall quality of their site. This helps your website to show up more in search engines and makes sure that visitors have a good experience by finding useful and unique content.
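
To give a feel for what a "how similar are these two pages" score means, here is a minimal Python sketch. It is not how Siteliner or any other tool works internally; it assumes a simple approach where each text is split into overlapping three-word groups (often called shingles) and the two sets are compared. The function names are made up for illustration.

```python
import re

def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Return the set of overlapping word groups ("shingles") in a text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < size:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}

def similarity(a: str, b: str, size: int = 3) -> float:
    """Jaccard similarity of the two shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, size), shingles(b, size)
    if not sa and not sb:
        return 1.0  # two empty texts are treated as identical
    return len(sa & sb) / len(sa | sb)

page_a = "The quick brown fox jumps"
page_b = "The quick brown fox sleeps"
print(similarity(page_a, page_b))  # share 2 of 4 distinct shingles
```

A score close to 1.0 suggests the pages are near copies and are candidates for a canonical tag, a redirect, or a rewrite. The threshold you act on is a judgment call for your own site.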

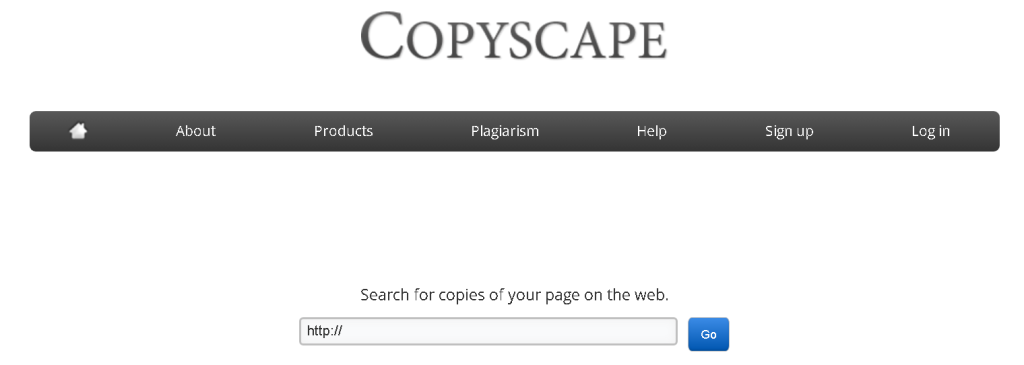

Copyscape

Copyscape helps website owners and content creators find if their content is copied by others on the internet. It also helps to improve how well the website shows up in search engines.

It works by checking online content to find duplicate information on the same website and on different websites. This complete scanning process makes sure that website owners can find any content that has been copied without permission or credit.

After the scan is finished, Copyscape gives a detailed report that shows how much content is the same and gives links to the pages that have the same content. These reports give important information about where and how content has been copied.

This helps users to make smart choices to protect their work and improve their search engine rankings. Copyscape not only finds copied content but also gives tools to fix the problems it finds.

People can decide to change content, ask to take down unauthorized copies, or use canonical tags to show which version of content is the most important. These actions help reduce the risk of having the same content in different places and also make sure that content is created and shared in a fair and honest way.

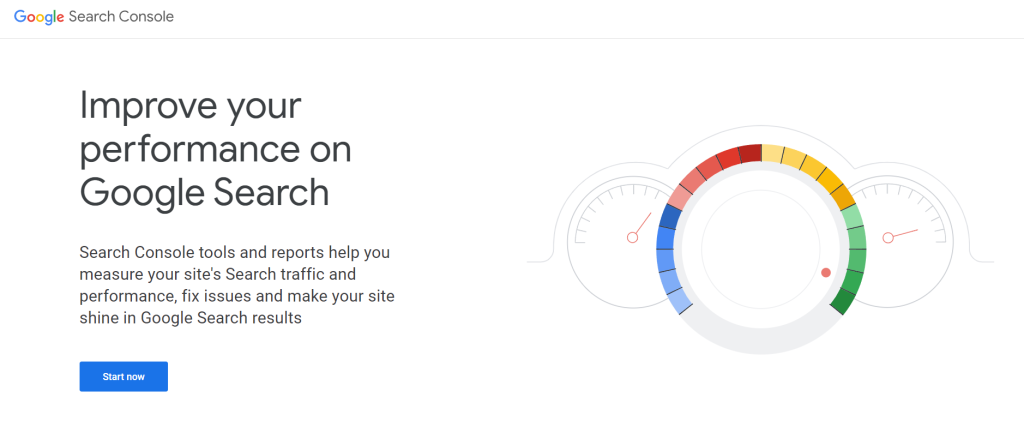

Google Search Console

Using Google Search Console to find duplicate content is an easy way for website owners to see if they have the same content on their site more than once. It helps them understand and fix any duplication issues. GSC has many tools to help find and manage duplicate content.

GSC’s Index Coverage report (now called Page indexing) shows which pages on a website are not included in Google’s index because they duplicate other pages. It groups them under reasons such as “Duplicate without user-selected canonical” and “Duplicate, Google chose different canonical than user”, so website managers can find the exact URLs that are affected.

GSC does not have a dedicated “Duplicate content” report. Instead, its URL Inspection tool shows, for any single page, the canonical URL you declared and the canonical URL Google selected. When the two differ, Google is treating the page as a duplicate of another one.

Canonicalization is not a setting inside GSC. Webmasters tell Google which URL is the main one through canonical tags, redirects, and sitemaps on their own site, and then use GSC to check that Google agrees. This helps make sure the important page gets credit for its content and helps to combine the power of links.


How to Fix Duplicate Content Issues?

Rewrite

Fixing duplicate content by rewriting means creating new and valuable content that is different from the existing duplicates. This helps improve SEO and makes the page more interesting for users.

The first step is to find pages or sections of content that are the same on a website or across different websites. Once these are found, website owners can rewrite the content to make it new and different, update the information, or present it from a fresh angle.

Rewriting also lets website owners tailor the information to their audience, which can increase the time people spend on the website and reduce the bounce rate (how often visitors leave without clicking on anything).

Additionally, rewriting content can make the website look more important by showing unique ideas or perspectives that make it different from other websites. This can help you show up higher in search results and get more people to visit your website without paying for ads.

To rewrite content well, website managers should keep the main message the same while adding more value or addressing current trends and interests. By creating distinctive and valuable content, websites can improve their SEO and become trusted sources in their industries.

301 Redirect

Fixing duplicate content with a 301 redirect means permanently sending duplicate URLs to one main version of the content. This helps improve search engine rankings. This method works well when the same content is on many different URLs on the same website or when it is copied across different websites that you control.

To set up a 301 redirect, webmasters figure out which URL they want search engines to show in search results. This choice is usually made by looking at things like how good the content is, how related it is, and how well the URL has done in search rankings before.

Once the URL is decided, the website managers make all the other duplicate URLs go to this main one. A 301 redirect tells search engine crawlers that the content has moved to a new location.

Search engines combine the ranking signals of the redirected URLs onto the preferred URL. This transfers link power and makes sure that users are sent to the most relevant and trustworthy version of the content.

By using 301 redirects, website managers can reduce the bad effects of having the same content in more than one place on search engines, make their website structure simpler, and make it easier for users to find the right content.
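
The redirect logic described above can be sketched in a few lines of Python. This is only an illustration under assumed names: the paths in `REDIRECTS` and the `respond` function are hypothetical. In practice redirects are usually configured in the web server or CMS rather than written by hand.

```python
from http import HTTPStatus

# Hypothetical mapping of duplicate paths to the one preferred path.
REDIRECTS = {
    "/shoes/index.html": "/shoes/",
    "/shoes-old/": "/shoes/",
    "/products/shoes/": "/shoes/",
}

def respond(path: str) -> tuple[int, dict[str, str]]:
    """Return (status code, headers) for a requested path."""
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 tells browsers and crawlers the move is permanent.
        return HTTPStatus.MOVED_PERMANENTLY.value, {"Location": target}
    return HTTPStatus.OK.value, {}

print(respond("/shoes-old/"))  # (301, {'Location': '/shoes/'})
print(respond("/shoes/"))      # (200, {})
```

Every duplicate points straight at the preferred URL rather than at another duplicate, so neither visitors nor crawlers have to follow a chain of redirects.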

Canonical Tags

Using canonical tags to fix duplicate content means telling search engines which version of a web page is the most important. This helps combine the power of backlinks and avoids duplicate content problems in search.

This method is helpful when there are many URLs with the same or very similar information, either on the same website or different websites. To use a canonical tag, website owners declare the main URL for a group of duplicate pages by adding a <link rel="canonical"> tag, with the main URL in its href attribute, to the head section of each page’s HTML.

It tells search engines that the given URL is the main version of the content and should be listed and ranked in search results. It tells search engines to combine the ranking signals from duplicate pages so that the main page gets credit for its content.

Canonical tags are useful when there is the same content in different places, like when there are different versions of a page or similar products on a website. Webmasters can use a preferred URL to stop duplicate content from affecting SEO and user experience in a bad way.

They help search engines index the main URL instead of duplicate versions, which makes the indexing process more efficient. This improvement makes sure that the best and most trustworthy content shows up when people search online. This helps the website get seen more and brings in more natural visitors.
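
As a rough illustration, the canonical tag for a page could be generated like this in Python. The function name `canonical_tag` and the example.com URLs are assumptions made for the sketch. Most CMS platforms and SEO plugins will produce this tag for you.

```python
from html import escape

def canonical_tag(preferred_url: str) -> str:
    """Build the <link rel="canonical"> element for a page's <head>."""
    # Escape the URL so characters like & are valid inside the attribute.
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'

# Every variant of the page would carry the same tag,
# pointing at the one preferred URL.
print(canonical_tag("https://example.com/shoes/"))
# <link rel="canonical" href="https://example.com/shoes/">
```

The same tag goes on the preferred page itself (a self-referencing canonical) and on each of its duplicates.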

Meta Tags

Fixing duplicate content with meta tags means using the robots meta tag, with directives such as noindex, to tell search engines how to handle pages with duplicate content. This helps maintain good search engine rankings and improves the website’s performance.

The noindex directive helps webmasters stop search engines from adding a page to their search results. When webmasters add <meta name="robots" content="noindex"> to the HTML head section of a duplicate page, they are telling search engines not to show that page in search results.

This helps to lower the risk of duplicate content problems and makes sure that the most important version of the content is the one that shows up in search results. The canonical tag works differently: it is a link element rather than a meta tag, and it tells search engines which URL to choose when there are several URLs with the same content.

This tag helps improve SEO by focusing on one main URL for the content, which makes the website more authoritative and helps it rank better. Meta tags help solve the problem of having the same content on different parts of a website.

They let website managers customize their SEO plans for different cases of duplicated content. By using meta tags, website owners can help search engines to find the best content on their site, making it easier for users to find what they need.
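
To check which of these signals a page actually sends, its HTML can be inspected. The sketch below uses Python's built-in `html.parser`. The class and function names are hypothetical, and the sample markup is invented for the example.

```python
from html.parser import HTMLParser
from typing import Optional

class IndexingHints(HTMLParser):
    """Collect robots meta directives and the canonical URL from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.robots: set[str] = set()
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        a = {name: (value or "") for name, value in attrs}
        if tag == "meta" and a.get("name", "").lower() == "robots":
            # content is a comma-separated list such as "noindex, follow"
            directives = a.get("content", "").split(",")
            self.robots |= {d.strip().lower() for d in directives if d.strip()}
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href") or None

def indexing_hints(html: str) -> tuple[set[str], Optional[str]]:
    """Return (robots directives, canonical URL or None) for an HTML page."""
    parser = IndexingHints()
    parser.feed(html)
    return parser.robots, parser.canonical

sample = (
    '<html><head><meta name="robots" content="noindex, follow">'
    '<link rel="canonical" href="https://example.com/page"></head></html>'
)
print(indexing_hints(sample))
```

A page that carries both noindex and a canonical pointing elsewhere sends mixed signals, so an audit like this is a quick way to spot pages where only one of the two was intended.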


URL Parameters

Solving duplicate content from URL parameters means controlling how search engines index the different versions of a URL that parameters create, mainly through canonical tags, consistent internal links, and settings in your website’s CMS.

URL parameters are extra pieces added to a web address, either to change what shows up on the page (such as sorting or filtering) or to carry tracking codes or session IDs. If not handled correctly, these variations can cause problems with duplicated content.

Google Search Console used to include a URL Parameters tool that let website managers say whether a parameter changed the page enough for search engines to notice or should be ignored. Google retired that tool in 2022, so these signals now have to come from the site itself.

Webmasters can choose a preferred URL and make sure search engines only use that one. This helps to avoid spreading authority across different variations of the URL. In CMS platforms like WordPress or Drupal, website managers can use plugins or settings to control URL parameters.

This means making sure that the website’s URLs are set up correctly, so that search engines can find and understand them easily. It may include things like adding specific tags to some pages, to stop search engines from showing multiple versions of the same page.

It could also mean changing the way the URLs are set up, to make sure they all look the same. This method helps search engines to find and show the most important and trustworthy content.
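
One way to make URL variants "all look the same" is to normalize them before they are used in internal links or sitemaps. The Python sketch below uses the standard `urllib.parse` module. The function name `normalize_url` and the specific rules (lowercase host, sorted parameters, no fragment) are assumptions chosen for illustration, not a standard every site must follow.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def normalize_url(url: str) -> str:
    """Return one consistent spelling for equivalent URL variants."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = parts.netloc.lower()       # host names are case-insensitive
    path = parts.path or "/"          # treat an empty path as the root
    # Sort parameters so ?a=1&b=2 and ?b=2&a=1 become the same URL.
    query = urlencode(sorted(parse_qsl(parts.query, keep_blank_values=True)))
    return urlunsplit((scheme, host, path, query, ""))  # drop the #fragment

print(normalize_url("HTTPS://Example.com/Shoes?size=9&color=red#reviews"))
# https://example.com/Shoes?color=red&size=9
```

The path is left untouched because paths can be case-sensitive on some servers. Whether to lowercase them is a decision for each site.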

Content Consolidation

Consolidating the same content into one page helps improve search rankings and also makes it easier for people to find information. This method works well when the same content is found on different web pages of the same site or on multiple websites.

To bring content together, webmasters find all the same content on their website. This might involve checking content or using tools like Google Search Console or SEO crawlers to find duplicate pages.

Once identical copies are found, website managers choose which version of the content they want to keep. They combine the same information from different pages into one, making sure that the new page has all the important information for users.

The process of consolidation usually includes updating links on the website to send people to the main page, setting up redirects from duplicate web addresses to the preferred one, and using canonical tags to show search engines the main version of the content.

By putting all the content together, website managers can make the site easier to understand and navigate, make it easier for search engines to find and index the site, and focus all the importance onto one main page.

This method helps prevent getting in trouble for using duplicate content, and also improves SEO by combining strong content that helps boost search engine rankings and keeps users interested and happy.
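
The discovery step, finding pages whose content is identical, could be sketched as follows in Python. It assumes you already have the text of each page, for example from a crawler export. The function names and sample pages are hypothetical. It only catches exact copies after whitespace and letter case are ignored, not near-duplicates.

```python
import hashlib
import re
from collections import defaultdict

def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and ignoring case."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose page text is identical; skip unique pages."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]

pages = {
    "/a": "Red  shoes on sale",
    "/b": "red shoes on sale\n",
    "/c": "Blue hats",
}
print(duplicate_groups(pages))  # [['/a', '/b']]
```

Each group that comes back is a set of candidates for consolidation: pick one URL to keep, then redirect or canonicalize the rest.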

Session IDs and Tracking Parameters

To stop having duplicate content showing up in different places because of session IDs and tracking parameters, we need to control how they affect the website address and make sure search engines don’t index multiple copies of the same content.

We add session IDs and tracking parameters to URLs to keep track of what users do on websites. If not handled correctly, they can cause problems with duplicate content and make SEO efforts less effective.

Google Search Console used to offer a URL Parameters tool for this, but Google retired it in 2022, so website owners now have to control on the site itself how these URL variations are treated by search engines.

Webmasters can keep session IDs out of URLs altogether, for example by storing them in cookies, and can use URL rewriting or canonical tags to point every variation with session IDs or tracking parameters at the best version of the URL. This helps them avoid duplicate content problems.

This tells search engine crawlers which version of the content is most important and should show up first in search results. Keeping the URL structure and internal links consistent can reduce the effect of session IDs and tracking parameters on SEO.
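
A minimal Python sketch of this kind of URL rewriting is shown below. The parameter names in `IGNORED_PARAMS` and `IGNORED_PREFIXES` are an assumed, illustrative list, and `strip_tracking` is a hypothetical name. Adjust the list to the parameters your own site and analytics tools use.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed examples of parameters that do not change page content.
IGNORED_PARAMS = {"sessionid", "sid", "phpsessid", "gclid", "fbclid"}
IGNORED_PREFIXES = ("utm_",)

def strip_tracking(url: str) -> str:
    """Remove session and tracking parameters, keeping the rest in order."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    return urlunsplit(parts._replace(query=urlencode(kept)))

print(strip_tracking(
    "https://example.com/shoes?color=red&utm_source=news&sessionid=abc123"
))
# https://example.com/shoes?color=red
```

The cleaned URL is the one to use in the canonical tag and in internal links, so that every tracked variant points back to a single address.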

Printer-Friendly Versions

Fixing duplicate content caused by printer-friendly versions means finding ways to control and improve these different versions while still maintaining the website’s search engine ranking. Printable versions of web pages are made to be easier to print.

Sometimes this can cause problems with having the same content multiple times if it’s not taken care of. One good way is to use canonical tags to tell search engines which URL we want them to index.

By choosing the original web page as the main version, webmasters combine ranking signals onto one important URL. This makes sure that the web page gets credit for its content in search engine results pages.

Website owners can use noindex meta tags on printer-friendly pages to stop search engines from including them in search results. This method makes sure that search engines only index the most important content and reduces the risk of problems from having the same content in multiple places.

Another way is to use a print stylesheet, which is CSS that applies only when a page is printed. This lets users print an easier-to-read version of the web page without needing a different web address. This method makes it easier for users to go back and forth between digital and printed content, without hurting SEO.


How to Prevent Duplicate Content?

Plan Your Content

To avoid duplicate content and create a good strategy for your content, it’s important to have an organized approach. Begin by doing a lot of research to really understand who your audience is and what they like.

Customize your content to meet their particular needs and interests, making sure that each piece provides something special. After that, make a schedule to plan what to write about and when to publish it.

This helps to keep things consistent and avoid accidentally repeating the same topics or messages. When thinking of ideas, try to be creative and open to different points of view so that your work stays interesting and new.

Make sure to use the right words and phrases that people are searching for, so search engines can find your content easily. This helps people find things easier and stops the same information being repeated. Check your content often to find any copies or repetitions.

Update or merge pages as needed to keep your website or platform clear and distinctive. Set clear rules for creating and sharing content in your team or organization. This makes sure that everyone knows how important it is to be original and to follow good content management practices.

Use a Content Management System

To stop having duplicate content, it’s important to use a Content Management System (CMS). A CMS makes it easier to create, organize, and publish content, reducing the chance of repeating the same content on your website or platform by accident.

CMS platforms have tools that can keep track of changes made to content. This transparency makes sure that content creators can quickly find and fix any copied content.

Additionally, CMS platforms usually have features that help organize and tag content.

These tools help organize content and make sure that similar topics are put together in a logical way without repeating the same information. CMS platforms usually have tools and add-ons that help improve the content for search engines.

This helps reduce the bad effects of duplicate content on search rankings by letting you write unique titles, descriptions, and tags for each page. CMS platforms also help team members work together by letting them have different roles and permissions.

This makes sure that people who create and edit content follow the rules and standards. It helps to prevent duplicate content that arises from misunderstandings or oversights.

Syndicate Carefully

To avoid duplicate content, it’s important to share content carefully. Syndication means sharing content on different websites or platforms, but this can cause problems if not handled correctly and lead to having the same content in more than one place.

Make sure you have permission to share the content. Avoid publishing identical copies on many websites without signalling which one is the original, because it could lower your search engine ranking. Also, when sharing, think about making small changes to the content for each platform.

This might mean changing the titles, starting paragraphs, or adding new ideas for each group of people who will see it. By doing this, you can make your content more valuable and reach more people without repeating the same information.

Use canonical tags or a link back to the original when sharing content. A canonical tag on the syndicated copy that points to your original tells search engines which version is the most important when there are many versions. This helps bring all the signals together and stops search engines from treating the copies as competing pages.

Keep checking the content you share to make sure it stays relevant. Keep your website up-to-date by changing or deleting old content that no longer serves a purpose. This will help to ensure that your website looks professional and doesn’t have the same information repeated multiple times.

Final Thoughts

Dealing with duplicate content is really important for keeping a strong and successful online presence. Webmasters can find duplicate content on their websites using tools like Siteliner, Copyscape, and Google Search Console.

Once you choose the preferred version of a webpage, you can use strategies like canonical tags, 301 redirects, and content consolidation to make sure search engines prioritize that version and the links that go with it.

Regular checks and content management not only make SEO better but also make the website better for users by showing clear, helpful, and original content. Doing these things not only keeps you from getting in trouble with search engines but also helps to build trust with people.

This can help your site appear higher in search results and bring in more people over time. By always checking for and fixing any duplicate content, website owners can keep their site strong and competitive online.

FAQs

What happens if I have duplicate content on my website?

It can make your website rank lower on search engines, make it less authoritative, and it could get penalized by search engines. When search engines find the same content multiple times, they might only show one of them. This can make the other versions harder to find and reduce the number of people who see them in search results.

Does duplicate content always come from deliberate plagiarism?

No, duplicate content can accidentally appear for different reasons like URL parameters, shared content, printer-friendly versions of pages, or problems with the systems that manage content. Even though purposeful copying is one type of duplicate content, website managers should also deal with accidental duplication by carefully managing their content and using SEO techniques to make sure it’s original and follows SEO rules.

How often should I check my website for duplicate content?

It’s good to check your website often, especially after you make big changes to your website. This can include moving your website to a new address or changing the way your URLs are organized. Regular audits help find and fix new cases of copied content quickly. This makes sure your website stays in good shape for search engines, makes it better for users, and follows the rules.

How can we stop duplicate content from happening in the future?

To avoid repeating the same information on different web pages, website managers should concentrate on making original and useful content that gives something special to the readers. Regularly checking your content helps find and fix accidental copies, while using meta tags like noindex stops search engines from indexing duplicate versions of your pages, which saves crawl resources and protects your search engine ranking.

How do I find duplicate content on my website?

You can use tools like Siteliner, Google Search Console, or Copyscape. Siteliner compares the pages of your own site against each other, Copyscape searches the web for copies of your content, and Google Search Console shows which of your URLs Google treats as duplicates. These tools produce reports that show where the same content appears in more than one place. They help website managers fix the problem by using canonical tags or consolidating content.