Rand Fishkin, CEO of SparkToro and founder of Moz, describes the Google Search Leak as unprecedented in both scale and detail. He emphasizes that no comparable leak has surfaced from Google’s search division in the past twenty-five years. What was expected to be a peaceful Tuesday morning was instead upended by the discovery of more than 2,500 pages documenting Google algorithm factors.

Posts by Rand Fishkin and Mike King, CEO of iPullRank, made significant revelations regarding the Google Search Leak from Google Search’s Content Warehouse API. Data sourced on May 5th underwent meticulous scrutiny, cross-verification, and confirmation by Rand in collaboration with other SEO experts and former Google insiders over several weeks.

The 14,014 leaked attributes (referred to as “ranking features”) are documented in a GitHub repository. Despite efforts to evaluate them and a thorough reading of Rand’s and Mike’s posts, this review comes from a single person on their second day back from vacation, with limited technical SEO expertise.

However, recognizing the urgency of the situation, immediate attention is warranted.

Today, the focus is on examining the implications of the Google Search Leak for bloggers and charting a course of action.

The claims made by an anonymous source seemed extraordinary and covered several areas:

- Google’s search team recognized early on the need for full clickstream data to improve search result quality.

- The desire for more clickstream data drove the creation of the Chrome browser in 2008.

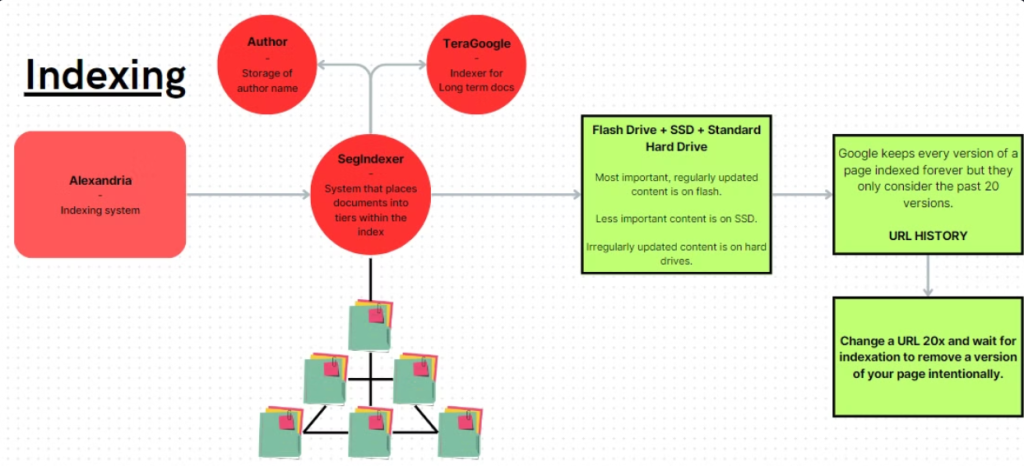

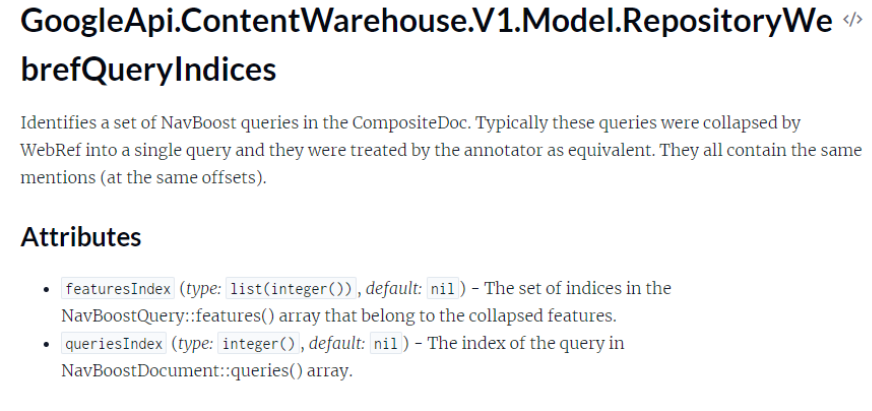

- Utilization of the NavBoost system to identify trending search demand and evaluate user intent.

- Google’s employment of various data sources, including cookie history and pattern detection, to combat click spam.

- Consideration of minor factors such as penalties for domain names matching unbranded search queries and spam signals during quality evaluations.

- Implementation of geo-fencing for click data and employment of whitelists during events such as the COVID-19 pandemic and democratic elections.

While some of these claims align with information disclosed during the Google/DOJ case, many appear novel, suggesting insider knowledge.

Following multiple emails, a video call was conducted with the anonymous source on May 24th. Subsequently, the source revealed their identity as an SEO practitioner and founder of EA Eagle Digital.

During the call, the source presented the Google Search Leak, comprising over 2,500 pages of API documentation from Google’s internal “Content API Warehouse,” uploaded to GitHub on March 27, 2024, and removed on May 7, 2024. Although the documentation lacks details on the search ranking algorithm, it offers extensive insights into the data collected by Google.

The source expresses hopes for transparency and accountability in Google’s practices, urging the publication of a post to disseminate the Google Search Leak’s findings and counteract purported misinformation propagated by Google over the years.

SEO service providers can use the insights gained from the Google search algorithm leak to improve their optimization strategies and align them with the newly revealed ranking factors. This can give them an advantage over competitors who are slower to adapt.

Furthermore, the detailed information from the leak can be used to enhance client reporting and consulting services, providing more accurate recommendations and demonstrating expertise in navigating the constantly changing SEO landscape.

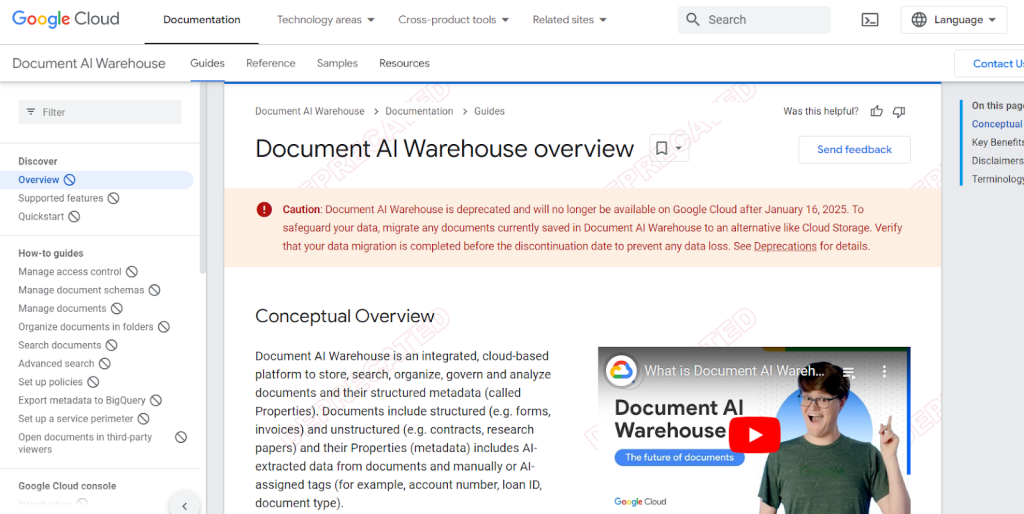

Document AI Warehouse

A crucial step in the process involved verifying the authenticity of the Content API Warehouse documents. Rand contacted former Google employees, shared the leaked documents, and asked for their opinions. Three ex-Googlers responded: one abstained from commenting, while the other two offered off-the-record insights:

- “I didn’t have access to this code when I worked there. But this certainly looks legit.”

- “It has all the hallmarks of an internal Google API.”

- “It’s a Java-based API. And someone spent a lot of time adhering to Google’s internal documentation and naming standards.”

- “I’d need more time to be sure, but this matches internal documentation I’m familiar with.”

- “Nothing I saw in a brief review suggests this is anything but legit.”

Additionally, @DavidGQuaid tweeted:

“I think it’s clear it’s an external facing API for building a document warehouse as the name suggests. That seems to throw cold water on the idea that the ‘leaked’ data represents internal Google Search information.”

As far as was known at that time, the “leaked data” resembled what is on the public Document AI Warehouse page.

Subsequently, assistance was sought in analyzing the documentation’s naming conventions and technical details. Rand contacted Mike King, founder of iPullRank and a prominent figure in technical SEO.

During a 40-minute phone call on Friday afternoon, Mike confirmed the suspicions: the documents appeared to be genuine, originating from within Google’s Search division and containing substantial previously unconfirmed information about the company’s internal operations.

Reviewing 2,500 technical documents over a single weekend was deemed an unreasonable task for one person. Nevertheless, Mike made his best effort. He delivered a detailed initial review of the Google API leak, which will be referenced further in the subsequent findings. Furthermore, he agreed to participate in SparkTogether 2024 in Seattle, WA, on Oct. 8, where he intends to present a comprehensive account of the leak with the benefit of several months of analysis.

Unveiling Google’s Deceptions: Insights From The API Documentation

It’s crucial to approach data with an open mind, especially considering the uncertainties surrounding its origins. For instance, without confirmation of whether this data stems from an internal Search Team document, it’s ill-advised to treat it as actionable SEO guidance.

Analyzing data to validate preconceived notions can trap one in Confirmation Bias, where information is selectively interpreted to align with existing beliefs. This bias can lead individuals to dismiss empirical evidence contradicting long-held theories, like the notion of Google’s Sandbox, despite instances of new sites ranking swiftly.

Brenda Malone, a Freelance Senior SEO Technical Strategist and Web Developer, shared her firsthand experience refuting the Sandbox theory. Contrary to Sandbox expectations, she indexed a personal blog with just two posts within two days.

In a tweet that has since been removed, John Mueller addressed a query about the timeframe for becoming eligible to rank, asserting, “There is no sandbox.”

Considering Google’s track record of providing potentially misleading information, termed “gaslighting,” it’s essential to scrutinize their statements. While Google denies using metrics like “domain authority” or clicks for rankings, evidence suggests otherwise. Likewise, the existence of a “sandbox” and the utilization of Chrome data for rankings, despite Google’s denials, is revealed through documentation and internal presentations.

These inconsistencies underscore the importance of critical analysis and experimentation within the SEO community rather than relying solely on Google’s assertions.

Key Insights From The Leaked Documents

Complex Ranking Factors

Google’s algorithm evaluates various ranking factors, including:

- Traditional SEO Elements: Backlinks, keyword relevance, and on-page SEO remain essential.

- User Engagement Metrics: Click-through rates (CTR), dwell time, and pogo-sticking are crucial. User behavior on SERPs is closely observed, and these metrics significantly impact rankings.

- Technical SEO: Factors like mobile-friendliness, site speed, and HTTPS security are vital. Ensuring your website is technically optimized for better crawlability and indexability is essential.

Content Quality and E-A-T

The importance of content quality is highlighted by the principles of E-A-T (Expertise, Authoritativeness, Trustworthiness). High-quality, authoritative content that provides genuine value is prioritized. Creating well-researched and credible content should be a primary focus for SEOs.

AI And Machine Learning Integration

Google’s use of AI and machine learning helps refine search algorithms by better understanding user intent and context. These technologies enable more personalized and relevant search results. Staying updated with AI trends and adapting SEO strategies to align with these advancements is increasingly essential.

Behavioral Signals And SERP Dynamics

User interaction with search results, such as the order of clicks and time spent on pages, impacts rankings. Optimizing meta descriptions, titles, and content to enhance user engagement can improve these metrics.

Practical Applications For SEOs

- Enhancing User Experience (UX): Given the importance of behavioral signals, improving UX should be a priority. This includes faster page load times, mobile optimization, intuitive navigation, and valuable content.

- Focusing on E-A-T: Build content that showcases expertise and authority. Achieve this by citing credible sources, obtaining backlinks from authoritative sites, and creating comprehensive, well-written content.

- Leveraging Technical SEO: Ensure your site meets technical SEO best practices. This includes using HTTPS, optimizing for mobile, improving site speed, and maintaining a clean, organized site structure.

- Adapting to AI Developments: Stay informed about AI advancements in SEO tools and techniques. Use AI-driven SEO tools to analyze data, predict trends, and automate repetitive tasks to stay competitive.

You can access the source of the leak here.

Google uses the page quality (PQ) metric, leveraging a language model to measure the “effort” required to create article pages and assess their replicability. Factors such as tools, images, videos, unique information, and information depth significantly influence these “effort” calculations, aligning with elements that enhance user satisfaction.

Topical authority is a concept derived from analysis of Google’s patents, and metrics like SiteFocusScore, SiteRadius, SiteEmbeddings, and PageEmbeddings appear crucial for ranking. SiteFocusScore indicates how focused a site is on a topic, while SiteRadius measures how far page embeddings deviate from the site embedding. This helps establish a site’s topical identity and informs optimization strategies to maximize page-focus scores.
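To make the idea of page embeddings deviating from a site embedding more concrete, here is a minimal sketch. The leak does not reveal Google’s formulas, so everything in it is an assumption for illustration: the site embedding is taken as the mean of the page embeddings, the radius is the average cosine distance of pages from that mean, and the function names and toy vectors are hypothetical.

```python
import math

def mean_vector(vectors):
    """Component-wise mean of equally sized vectors (assumed site embedding)."""
    count = len(vectors)
    return [sum(v[i] for v in vectors) / count for i in range(len(vectors[0]))]

def cosine_distance(a, b):
    """1 minus cosine similarity; 0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def site_radius(page_embeddings):
    """Average distance of each page from the site centroid (illustrative only)."""
    site_embedding = mean_vector(page_embeddings)
    distances = [cosine_distance(page, site_embedding) for page in page_embeddings]
    return sum(distances) / len(distances)

# Toy 3-dimensional "embeddings"; real embeddings have hundreds of dimensions.
focused_site = [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0], [0.95, 0.05, 0.0]]
scattered_site = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

print(site_radius(focused_site) < site_radius(scattered_site))
```

Under these assumptions, a blog that stays on one topic has a small radius, while a site covering unrelated topics has a large one.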

ImageQualityClickSignals assesses image quality based on click signals, considering factors like usefulness, presentation, appeal, and engagement. Host NSR, computed at the host level, includes nsr, site_pr, and new_nsr, with nsr_data_proto being its latest version, although detailed information is limited.

Sitechunk analysis involves dissecting parts of a domain to determine site rank, reflecting Google’s detailed evaluation approach across pages, paragraphs, and topics. This system resembles chunking and appears designed to sample various quality metrics, much like a spot check.

Explore Captivating Ranking Factors

Here are some points deemed interesting and significant for bloggers:

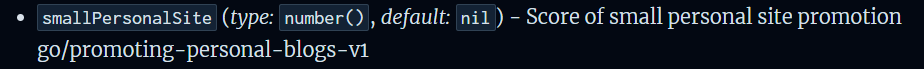

- A distinguishing attribute characterizes small personal sites/blogs, though its purpose remains uncertain.

- Google assigns a site Authority score.

- NavBoost relies heavily on click data, including metrics like the longest click from the SERPs and the last good click, tracked over a 13-month window.

- Google monitors Chrome visits to analyze user behavior.

- Twiddlers are re-ranking algorithms that operate between major updates, influencing SERP rankings.

- Over-optimized anchor text, primarily when consistently used for links from third-party sites, seems to prompt a spam demotion for those links.

- Inadequate navigation and exact match domains may lead to lower rankings.

- Google stores at least the last 20 versions of web pages, so frequent updates may be needed to reset a page’s status. The extent of change needed for a page to be considered a new version remains unclear.

- Google tracks attributes like link font size and text weight, with larger links perceived more positively. Bolded text is interpreted differently and is also beneficial for accessibility.

- Google employs a keyword stuffing score.

- Google monitors domain expiration dates to identify expired domain abuse.

- Websites with videos on over 50% of their pages are classified as video sites. Requirements regarding indexing, placement within posts, or native uploading are unclear.

- YMYL content is assigned its own ranking score.

- A “gold standard” attribute appears to identify human-generated content, although the criteria for activation are ambiguous.

- AI Overviews are not referenced in the document.

- Internal links are not explicitly mentioned as an attribute.

- Travel, Covid, and politics have “whitelists,” which suggests that sites must be approved before being shown for these topics. The scope of travel sites covered is uncertain, whether for general SERPs, Google’s “travel” section, or related widgets. Notably, travel is the sole non-YMYL niche addressed, possibly a remnant of Covid lockdowns that may now be obsolete.

Essential SEO Strategies For Optimizing Your Website

If SEO is important for your site, remove or block pages that aren’t relevant. Connect topics contextually to reinforce links, but first establish your target topic and ensure each page is optimized according to the guidelines at the end of this document.

Headings must be optimized around queries, and the paragraphs under these headings must answer those queries clearly and succinctly. Embeddings are evaluated at both the page level and the site level.

Clicks and impressions are aggregated and applied on a topic basis, so write content that can earn more impressions and clicks. According to the leaked documents, providing a good experience and consistently expanding your topic will lead to success.

Google gives the lowest storage priority to irregularly updated content and will not display it for Freshness. It is essential to update your content regularly by adding unique information, new images, and video content, aiming to score high on the “effort calculations” metric.

Maintaining high-quality content and frequent publishing is challenging but rewarding. Google applies site-level scores predicting site and page quality based on your content. Consistency is crucial as Google measures variances in many ways.

Impressions for the entire website are part of the Quality NSR data, so you should value impression growth as it indicates quality.

Entities are crucial, according to the leak. Salience scores for entities and top entity identification are mentioned.

Remove poorly performing pages. If a page’s user metrics are poor, no links point to it, and it has had sufficient time to perform, eliminate it. Site-wide scores and scoring averages are significant, so removing weak pages is as important as optimizing new content.
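The three pruning conditions above can be expressed as a simple filter. This is only a sketch: the field names, the 90-day click window, and the thresholds are assumptions chosen for illustration, not values taken from the leak.

```python
from dataclasses import dataclass

@dataclass
class PageStats:
    url: str
    clicks_last_90_days: int  # hypothetical user metric from your analytics
    inbound_links: int        # internal plus external links pointing at the page
    age_days: int             # days since the page was published

def pruning_candidates(pages, max_clicks=5, min_age_days=180):
    """Return URLs that meet all three conditions: weak user metrics,
    no links pointing at the page, and enough time to have performed."""
    return [
        page.url
        for page in pages
        if page.clicks_last_90_days <= max_clicks
        and page.inbound_links == 0
        and page.age_days >= min_age_days
    ]

pages = [
    PageStats("/old-thin-post", clicks_last_90_days=1, inbound_links=0, age_days=400),
    PageStats("/new-post", clicks_last_90_days=0, inbound_links=0, age_days=14),
    PageStats("/linked-post", clicks_last_90_days=2, inbound_links=3, age_days=500),
    PageStats("/popular-post", clicks_last_90_days=900, inbound_links=12, age_days=700),
]

print(pruning_candidates(pages))
```

Requiring all three conditions together keeps new pages and pages with links out of the removal list, even when their traffic is low.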

Have We Confirmed Seed Sites Or Overall Site Authority?

The variable “IsElectionAuthority” is a binary indicator to identify if a website has the election authority signal. This signal, also known as “seed sites,” plays a significant role in online authority and credibility. Its presence indicates a level of importance within the digital landscape, although its precise definition is not clearly established.

The term “seed sites” suggests a foundational role in distributing information or shaping online discourse, potentially serving as primary sources or authoritative hubs within specific domains. However, the exact criteria for this designation remain unclear.

One interpretation suggests that the election authority signal is reserved for websites deemed authoritative in either linkages or topical expertise. Link authorities are sites with a strong backlink profile, often viewed as reputable sources by search engines due to their connections with other trusted domains. Conversely, topic authorities are platforms recognized for their expertise within specific subject areas.

Although the exact criteria for becoming an election authority are uncertain, its inclusion among online signals highlights the complexity of assessing digital credibility and influence. Understanding the role and implications of such signals is crucial for researchers, policymakers, and digital stakeholders navigating online information dissemination and authority attribution.

Possible Utilization of Chrome Data in Ranking

We have demonstrated that uploading a PDF to a new domain and accessing its direct URL in Chrome leads to the PDF being indexed. Google has persistently claimed that it does not use any Chrome data for organic search: Matt Cutts said so in 2012, and John Mueller has echoed this stance over the years.

However, it appears that Google does track user interactions through the Chrome browser via uniqueChromeViews and chromeInTotal metrics. Additionally, there are allegations suggesting that the Chrome browser was primarily designed to gather more clickstream data.

Furthermore, Google has acknowledged that, for an extended period, it collected data from users even when they were in incognito mode.

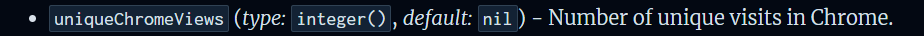

Crawling

- Trawler: Manages web crawling operations, overseeing the crawl queue and accurately gauging the frequency of page updates and changes.

Indexing

- Alexandria functions as the primary indexing system, providing the foundational structure for organizing and accessing data efficiently.

- SegIndexer operates by categorizing documents into distinct tiers within the index, facilitating streamlined retrieval and management based on hierarchical levels.

- TeraGoogle serves as a supplementary indexing system, specifically designed for the long-term storage of documents on disk. It complements Alexandria and SegIndexer by providing additional indexing capabilities tailored to accommodate documents stored over extended periods.

Rendering

- HtmlrenderWebkitHeadless: This component is responsible for rendering JavaScript pages. Despite its name referencing Webkit, it is likely that it utilizes Chromium instead, as indicated by documentation mentioning a transition from WebKit to Headless Chrome.

Processing

- LinkExtractor: Designed to extract links from web pages.

- WebMirror: Manages the process of canonicalization and duplication of web content, ensuring consistency and avoiding redundancy.

Ranking

- Mustang: Serves as the primary system for scoring, ranking, and ultimately serving web content.

- Ascorer: Serves as the primary algorithm responsible for the initial ranking of pages before any further re-ranking adjustments.

- NavBoost: Implements a re-ranking mechanism based on user click logs, thereby enhancing the relevance of search results.

- FreshnessTwiddler: Implements a re-ranking mechanism that prioritizes fresher content, ensuring that more recent documents are appropriately ranked.

- WebChooserScorer: Defines the feature names utilized in snippet scoring, contributing to the relevance and accuracy of search result snippets.

Serving

- Google Web Server (GWS): Acts as the server that interfaces with Google’s frontend, receiving data payloads to be displayed to users.

- SuperRoot: Functions as the central system of Google Search, facilitating communication with Google’s servers and managing the post-processing system responsible for re-ranking and presenting search results.

- SnippetBrain: Responsible for generating the snippets accompanying search results and providing users with brief content summaries.

- Glue: Integrates universal search results using data on user behavior, ensuring a cohesive and comprehensive search experience.

- Cookbook: Generates signals utilized in various processes, with some signal values dynamically created at runtime, enhancing the adaptability and effectiveness of Google’s search algorithms.

What Exactly Are Twiddlers?

Online resources on Twiddlers are notably scarce, necessitating a comprehensive explanation to enhance our understanding, particularly in the context of various Boost systems.

Twiddlers are re-ranking components that run after the primary Ascorer search algorithm. Similar to filters and actions in WordPress, they dynamically adjust what is presented to users. They can modify a document’s information retrieval score or alter its ranking. Many live experiments and established systems within Google are orchestrated through Twiddlers, highlighting their pivotal role.

One noteworthy functionality of Twiddlers is their ability to impose category constraints, promoting diversity in search results by restricting the types of content displayed. For instance, the author of a Twiddler might limit a Search Engine Results Page (SERP) to only three blog posts, which helps explain when ranking is out of reach given your page format.

Understanding the evolution of algorithms, such as Panda, within Google’s ecosystem provides further insight. When Google indicates that a feature like Panda wasn’t initially integrated into the core algorithm, it implies its deployment as a Twiddler for re-ranking boost or demotion calculation, followed by its eventual incorporation into the primary scoring function. This analogy aligns with the conceptual difference between server-side and client-side rendering in web development.

In the documentation, functions appended with the “Boost” suffix operate within the Twiddler framework. Notable examples include:

- NavBoost

- QualityBoost

- RealTimeBoost

- WebImageBoost

Their functions are largely discernible from their names.

An internal document on Twiddlers reveals detailed insights that complement the observations made in this discourse. A comprehensive understanding of Twiddlers is indispensable for effectively navigating the complexities of Google’s ranking algorithms.
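The re-ranking behaviour described in this section can be pictured with a small sketch. It is not Google’s implementation: the function names, the multiplicative boost, the result fields, and the example URLs are all assumptions used to illustrate a score adjustment followed by a category constraint.

```python
def boost_twiddler(results, boosts):
    """Multiply each result's initial score by a per-URL boost (default 1.0)
    and re-sort, leaving the input list untouched."""
    rescored = [
        {**result, "score": result["score"] * boosts.get(result["url"], 1.0)}
        for result in results
    ]
    return sorted(rescored, key=lambda result: result["score"], reverse=True)

def category_limit_twiddler(results, category, max_count):
    """Keep the existing order but drop results of `category` beyond max_count."""
    kept, seen = [], 0
    for result in results:
        if result["category"] == category:
            if seen >= max_count:
                continue
            seen += 1
        kept.append(result)
    return kept

# Hypothetical output of the initial scoring stage.
initial = [
    {"url": "a.example/post", "score": 0.90, "category": "blog"},
    {"url": "b.example/post", "score": 0.85, "category": "blog"},
    {"url": "c.example/shop", "score": 0.80, "category": "product"},
    {"url": "d.example/post", "score": 0.75, "category": "blog"},
    {"url": "e.example/post", "score": 0.70, "category": "blog"},
]

reranked = boost_twiddler(initial, {"c.example/shop": 1.2})
final = category_limit_twiddler(reranked, "blog", max_count=3)
print([result["url"] for result in final])
```

In this toy run the fourth blog post is dropped no matter how well it scored, which is the situation in which further optimization of that page format cannot help.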

What Is NavBoost?

NavBoost is a crucial factor in Google’s ranking system, influencing the order of website appearances in search results. It focuses on navigation queries, which are intended to lead users to specific websites like “youtube.com” or “facebook.com.” This system relies significantly on user clicks, as Google records data on clicked results from navigation queries to improve future search results.

However, NavBoost is not entirely dependent on clicks. It also considers other aspects of websites, such as mobile compatibility and navigational clarity. Although NavBoost does not directly measure engagement, it uses clicks as an indicator of engagement to identify searches that produce valuable websites.

Insights That Could Impact Your SEO Strategy

Before we proceed, it’s essential to clarify the aim here: it’s about introducing fresh ideas to the SEO scene rather than prescribing exact solutions for specific needs. If tailored advice is what you’re after, consider hiring iPullRank. Otherwise, there’s plenty here for you to glean insights from and shape your strategies.

Understanding Panda

When Panda first launched, there was much uncertainty. Was machine learning behind it? Did it consider user signals? Why were updates or refreshes needed to recover from its impact? And why did traffic vary for specific subdirectories?

What is now known about Panda answers these questions and challenges popular beliefs. Panda didn’t rely heavily on machine learning but established a scoring modifier based on various signals related to user behavior and external links. This modifier could be applied at different levels: domain, subdomain, or subdirectory.

Reference queries, used by Panda for refreshes, were essentially derived from NavBoost. This means Panda’s refreshes operated similarly to Core Web Vitals calculations, with updates to the link graph not necessarily processed in real-time.

To maintain or improve rankings post-Panda, focus on generating more meaningful clicks across a broader range of queries and diversifying your link portfolio.

Authors Take Center Stage

Despite the nebulous nature of E-A-T (Expertise, Authoritativeness, Trustworthiness), Google explicitly records authors associated with documents. They also assess whether entities mentioned on a page are its authors. This, coupled with detailed entity mapping and embeddings, suggests a comprehensive evaluation of authors by Google.

Algorithmic Demotions

The documentation hints at several algorithmic demotions, including:

- Anchor Mismatch

- SERP Demotion

- Nav Demotion

- Exact Match Domains Demotion

- Product Review Demotion

- Location Demotions

- Porn Demotions

- Other Link Demotions

Understanding these demotions can inform your strategy, emphasizing the importance of producing high-quality content and optimizing user experience.

Content Length Matters

Google uses a token system to evaluate content originality by comparing the number of tokens to the total words on a page. This system includes several components: numTokens, which sets the maximum word limit processed per page; leadingtext, which stores the initial text; and word count. It’s essential to prioritize key and unique information early on the page for accurate interpretation by Google.

The “OriginalContentScore” specifically applies to short-form content, highlighting that quality isn’t solely dependent on length. Additionally, the spamtokensContentScore affects content evaluation. For longer content, it’s crucial to position essential information near the beginning.
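A small sketch shows why a token limit rewards putting key information first. The whitespace tokenizer and the limit of 10 are assumptions for illustration; the leak does not state how Google tokenizes pages or what the actual numTokens value is.

```python
def split_at_token_limit(text, max_tokens):
    """Split text into the tokens that fit within the limit and those cut off.
    Whitespace splitting stands in for a real tokenizer."""
    tokens = text.split()
    return tokens[:max_tokens], tokens[max_tokens:]

def processed_ratio(text, max_tokens):
    """Share of the page's words that fall inside the token limit."""
    tokens = text.split()
    if not tokens:
        return 0.0
    return min(len(tokens), max_tokens) / len(tokens)

page = "key facts first " + "filler " * 20
kept, dropped = split_at_token_limit(page, max_tokens=10)

print(kept[:3])
print(len(dropped))
print(round(processed_ratio(page, max_tokens=10), 2))
```

Anything past the cut-off is simply never seen in this model, so unique information buried at the end of a long page would not count.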

Quality Raters Might Count

Google employs a team of “quality raters” to evaluate websites, guided by publicly available quality rater guidelines. While the exact impact of these raters on the search algorithm is uncertain, leaked Google search documents provide some insights.

Certain elements suggest that data collected by quality raters, such as furballUrl and raterCanUnderstandTopic attributes in relevance ratings documents, are retained. Additionally, references to “humanRatings” and terms like “ewok” appear. It is unclear whether this information only contributes to training datasets or directly influences the algorithm.

Thus, the opinion of a quality rater assessing your website could plausibly influence its rankings. Reviewing Google’s Search Quality Rater Guidelines is advisable to ensure your website meets the required standards.

Backlinks Remain Crucial

Google has consistently claimed that the significance of links has diminished over time. However, independent tests consistently demonstrate otherwise, challenging this assertion.

The leaked documents provide no evidence that links have become less important. On the contrary, the presence of the “linkInfo” attribute emphasizes the significance of links to the model.

Additionally, backlinks function as a modifier across various ranking factors and parameters. The heightened importance attributed to PageRank for home pages and the siteAuthority metric further underscores the ongoing relevance of backlinks.

This is evident from the frequent mention of PageRank in the documents. The sophisticated nature of Google’s “link graph” enables its engineers to assess the quality of backlinks effectively, reaffirming their importance within the algorithm.

The Connection Between Traffic And Value

This information comes from a reliable source linked to leaked Google search documents. Google categorizes links into three types: low quality, medium quality, and high quality, based on source type and link weight. Google uses click data to determine each link’s category.

Links from pages without traffic are considered low quality and have minimal impact on website rankings, potentially being ignored by Google. In contrast, links from high-traffic pages are regarded as high quality and positively affect site ranking.

Google determines page clicks using the totalClicks attribute. Links from top-tier pages are more influential due to their higher quality designation.
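The three-tier idea can be sketched as a simple classification by the click count of the linking page. The thresholds below are invented for illustration, and the example URLs are hypothetical; the leak names the totalClicks attribute but does not publish cut-off values.

```python
def link_tier(source_total_clicks, medium_threshold=100, high_threshold=10_000):
    """Classify a link by the clicks its source page receives (assumed thresholds)."""
    if source_total_clicks >= high_threshold:
        return "high"
    if source_total_clicks >= medium_threshold:
        return "medium"
    return "low"

def tier_backlinks(clicks_by_source):
    """Group linking pages into quality tiers based on their click counts."""
    tiers = {"low": [], "medium": [], "high": []}
    for source_url, total_clicks in clicks_by_source.items():
        tiers[link_tier(total_clicks)].append(source_url)
    return tiers

backlinks = {
    "news.example/story": 50_000,
    "blog.example/roundup": 500,
    "directory.example/listing": 0,
}

print(tier_backlinks(backlinks))
```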

Measuring Backlink Velocity

Various advanced metrics are gathered to monitor backlink velocity, the rate at which a website gains or loses backlinks over a specific period. Google uses metrics like “PhraseAnchorSpamDays” to evaluate the speed and volume of new links, helping distinguish between natural link growth and attempts to manipulate rankings. This understanding illuminates how Google may impose link penalties.
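Backlink velocity as defined above is a rate, so it can be computed from a dated log of gained and lost links. The sketch below assumes you already have such a log from a backlink tool; the event format and function name are hypothetical, and nothing here reflects how Google computes its own metrics.

```python
from datetime import date

def backlink_velocity(events, start, end):
    """Net backlinks gained per day between start and end, inclusive.
    Each event is a (date, delta) pair: +1 for a gained link, -1 for a lost one."""
    days = (end - start).days + 1
    net_change = sum(delta for day, delta in events if start <= day <= end)
    return net_change / days

events = [
    (date(2024, 1, 2), +1),
    (date(2024, 1, 3), +1),
    (date(2024, 1, 5), +1),
    (date(2024, 1, 5), +1),
    (date(2024, 1, 8), +1),
    (date(2024, 1, 9), -1),
    (date(2024, 2, 1), +1),  # outside the window below, so ignored
]

print(backlink_velocity(events, date(2024, 1, 1), date(2024, 1, 10)))
```

Comparing this rate across consecutive windows is one way to spot a sudden spike, the kind of pattern that might separate natural growth from a manipulation attempt.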

Tracking Your Modifications

Google’s index works similarly to the Wayback Machine, storing various versions of web pages over time. According to the leaked documentation, the search engine keeps a record of all indexed content indefinitely.

The CrawlerChangeRateUrlHistory system is crucial in this process. As a result, redirecting a page to another doesn’t necessarily transfer its link equity, as Google understands the context and relevance of the original page.

However, the system mainly focuses on a given page’s last 20 versions (urlHistory). This means that for significant changes to take effect, it might be necessary to update and re-index the page more than 20 times.
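A bounded history like this behaves like a fixed-size queue: once 20 versions are stored, each new version pushes the oldest one out. The sketch below illustrates that behaviour only. The class name is hypothetical, and treating any change in a content hash as a new version is an assumption, since the leak does not say how much change counts.

```python
from collections import deque

class UrlHistory:
    """Keeps only the most recent versions of a page (illustrative model)."""

    def __init__(self, max_versions=20):
        self.versions = deque(maxlen=max_versions)

    def record(self, content_hash):
        # Assumption: a new version is stored only when the content changed.
        if not self.versions or self.versions[-1] != content_hash:
            self.versions.append(content_hash)

history = UrlHistory()
for number in range(25):
    history.record(f"v{number}")
history.record("v24")  # unchanged content, so no new version is stored

print(len(history.versions))
print(history.versions[0])
```

In this model the first five versions are no longer visible after 25 updates, which is why fully replacing a page’s history would take more than 20 recorded changes.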

The Vital Importance of User Signals

The SparkToro post shared an email from Google VP Alexander Grushetsky regarding NavBoost. It also included a resume excerpt describing NavBoost as one of Google’s most potent ranking signals. This underscores the critical importance of user intent in Google’s search algorithm.

When users consistently bypass your website to select a competitor’s offering, Google interprets this as the competitor better satisfying the query. Consequently, the algorithm is primed to elevate the competitor’s website in organic search results, irrespective of your content quality or backlink strength.

Google’s inclination to reward websites based on user preference implies that user intent and the NavBoost system stand as pivotal ranking factors in Google’s algorithm, eclipsing even the influence of backlinks.

While relevant backlinks may facilitate reaching page 1, sustaining that position necessitates positive user signals.

The Importance of Keeping Content Fresh

Google places importance on both new content and consistently updated older content. The leaked documentation describes several measures Google uses to evaluate the freshness of content.

The main metrics consist of:

- bylineDate: The date shown on the page.

- syntacticDate: The date taken from the URL or title.

- semanticDate: The approximated date inferred from the content of the page.

Google uses these metrics to assess content freshness reliably. There are also attributes such as lastSignificantUpdate and contentage, which underscores the value of regularly updating structured data, page titles, content, and sitemaps to uphold freshness.
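Because a date can come from the byline and from the URL, it is worth checking that your own pages do not send conflicting signals. The sketch below pulls a date out of a URL path and compares it with a byline date. The URL pattern, function names, and one-day tolerance are assumptions for illustration, not a description of how Google derives syntacticDate.

```python
import re
from datetime import date

# Matches paths such as /2024/05/28/ (an assumed, common blog URL pattern).
URL_DATE = re.compile(r"/(\d{4})/(\d{1,2})/(\d{1,2})(?:/|$)")

def syntactic_date_from_url(url):
    """Return the date embedded in the URL path, or None if absent or invalid."""
    match = URL_DATE.search(url)
    if not match:
        return None
    year, month, day = map(int, match.groups())
    try:
        return date(year, month, day)
    except ValueError:  # e.g. month 13 or day 40
        return None

def dates_agree(byline, syntactic, tolerance_days=1):
    """True when the two dates are within the tolerance of each other."""
    return abs((byline - syntactic).days) <= tolerance_days

url_date = syntactic_date_from_url("https://example.com/2024/05/28/google-leak/")
print(url_date)
print(dates_agree(date(2024, 5, 28), url_date))
```

A page whose URL says 2019 while its byline says 2024 would fail this check, which is the kind of inconsistency worth fixing when refreshing old posts.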

Information Regarding Domain Registration

Google actively collects and maintains current domain registration information to determine domain ownership and registration locations. Domain names are considered digital assets, and Google utilizes its domain registrar status to monitor domain ownership records. While it is unclear if Google uses this data to affect search results, it is confirmed that they collect such information. Furthermore, in the March 2024 update, Google addressed concerns regarding the misuse of expired domains.

Personal Websites Receiving Increased Attention

This finding could provoke strong reactions, and understandably so. The documentation contains an attribute that flags a website as a “small personal site.” What is the rationale behind this designation? Its description, “Score of small personal site promotion,” suggests a potential role in promoting small personal sites.

However, the lack of supplementary details regarding the definition of a small personal site is notable. Furthermore, there is no explicit indication of its utilization within the algorithm. Speculation aside, the exact purpose remains uncertain.

Other Insights

- Documents have a token limit, emphasizing the importance of prioritizing content.

- Short content is scored for originality, with a separate score for keyword stuffing.

- Page titles are still evaluated against queries, indicating the importance of keyword placement.

- No specific metrics track metadata character counts.

- Dates play a crucial role in determining content freshness.

- Domain registration information is collected, though its effect on search results is unclear.

- Video-focused sites and YMYL (Your Money Your Life) content are assessed differently.

- Site embeddings help measure content relevance.

- Google may prioritize or intentionally demote small personal sites.

These insights provide valuable guidance for refining SEO strategies in light of Google’s evolving algorithms and priorities.

Key Takeaways From Google API Information For Bloggers

- Prioritize User Needs: Bloggers should create genuinely helpful and engaging content for their audience. This involves delivering high-quality information and insights that address the needs and interests of users rather than simply optimizing for search engines or chasing trending keywords.

- Enhance User Experience: Websites must be user-friendly. This includes ensuring easy navigation, fast loading times, and a smooth experience for visitors. A well-designed website helps retain users and encourages them to explore more content.

- Develop a Strong Brand: Building a loyal following requires consistently providing valuable content and establishing trust with the audience. Bloggers should diversify their traffic sources, instead of relying solely on Google search, by engaging with their audience through various platforms and creating a recognizable and trustworthy brand.

- Acquire Quality Links: Securing high-quality backlinks from relevant and authoritative websites can significantly improve a blog’s credibility and search ranking. Focus on natural link-building practices and avoid spammy or manipulative tactics that can harm the site’s reputation.

- Avoid SEO Myths: Many traditional SEO practices, such as keyword stuffing and rigid adherence to specific character counts for titles and descriptions, are no longer as effective as once thought. Bloggers should prioritize creating valuable content over meeting arbitrary SEO criteria. The specifics of E-A-T (Expertise, Authoritativeness, Trustworthiness) are also less critical than producing genuinely helpful content.

- Test and Analyze: Continuous experimentation and analysis are essential for understanding the best strategies for a blog. Bloggers should regularly test different approaches, monitor their performance, and adjust their tactics based on data. This iterative process helps refine content and improve overall site performance.

- Maintain Content Freshness: Keeping content up-to-date is vital. Information should be regularly reviewed and updated to reflect the latest developments and insights. Fresh content improves user experience and signals to search engines that the site is active and relevant.

- Present Main Content Promptly: Bloggers should avoid lengthy introductions and quickly reach the main point. Users appreciate concise and direct content that delivers value without unnecessary preamble.

- Focus on Your Niche: Establishing authority within a specific niche involves creating high-quality content that caters to the unique interests and needs of the target audience. Bloggers can build a reputation as experts and attract a dedicated following by specializing in a particular area.

- Embrace Uncertainty: The Google ranking algorithm is complex and constantly evolving. Instead of predicting and reacting to every change, bloggers should focus on consistently creating excellent content and strengthening their brand. This approach helps achieve long-term SEO success regardless of algorithmic fluctuations.

The Google Data Leak: Unveiling The Facts

Consider the following aspects regarding the leaked data:

- Context of the Leaked Information: The specific context of the leaked data remains unidentified. Determining whether this information is related to Google Search or serves other purposes within the company is crucial. Without knowing the context, it is difficult to ascertain the significance and impact of the data.

- Purpose of the Data: Understanding the data’s intended use is essential. Was this information employed to generate actual user search results, or was it intended for internal data management or manipulation? Clarifying the purpose will help assess the relevance and potential consequences of the leak.

- Confirmation from Ex-Googlers: Former Google employees have not explicitly verified that the leaked data is specific to Google Search. Their statements only confirm that the data appears to originate from Google. This distinction matters because it leaves room for the possibility that the data relates to other Google services or projects.

- Avoiding Confirmation Bias: It is essential to remain objective and avoid seeking validation for pre-existing beliefs or assumptions. Confirmation bias can lead to selectively interpreting information in a way that supports one’s views, potentially skewing the understanding of the leaked data. An impartial analysis is necessary to draw accurate conclusions.

- Evidence of External-Facing API: Evidence suggests that the leaked data may be related to an external-facing API intended for building a document warehouse. This indicates that the data could be part of a more extensive system designed for organizing and managing documents, rather than being directly tied to search engine functionalities.

Considering these aspects, a more precise understanding of the leaked data and its implications can be achieved.

Opinions On “Leaked” Documents

Ryan Jones, an SEO expert with a strong background in computer science, shared his thoughts on the alleged data leak.

Ryan tweeted:

“We don’t know if this is intended for production or testing. It is likely for testing potential changes.

We don’t know what is used for the web or other verticals. Some elements might be exclusive to Google Home or News.

We don’t know what is an input to a machine learning algorithm and what is used for training. Clicks likely aren’t a direct input but help train a model to predict clickability, outside of trending boosts.

It is probable that some fields only apply to training data sets, not all sites.

I am not claiming Google is truthful, but we should analyze this leak objectively and without bias.”

@DavidGQuaid tweeted:

“We also don’t know if this is for Google Search or Google Cloud document retrieval.

APIs seem to be selectively used, which is not how I expect the algorithm to function. An engineer might skip all those quality checks—this appears to be a setup for building a content warehouse app for an enterprise knowledge base.”

Other industry experts advise caution in interpreting the leaked documents. They note that Google might use the information for testing or apply it to specific search verticals rather than as active ranking signals. Additionally, there are uncertainties about the weight of these signals compared to other ranking factors, as the leak lacks full context and algorithm details.

Is The Data Allegedly Leaked Connected To Google Search?

Google has acknowledged the authenticity of certain leaked internal documents related to Google Search. However, the available evidence does not definitively establish that the leaked data originates from Google Search. There is uncertainty about the intended use of this data, with indications suggesting it serves as an external-facing API for document warehousing rather than being directly related to Google Search’s website ranking processes.

The leaked documents, which detail the data collected by Google and its potential incorporation into search ranking algorithms, have sparked speculation and analysis within the SEO community. The ongoing discussion revolves around whether this leak will prompt Google to tighten its already restricted communication channels.

The existence of the leaked documents was first reported earlier this week by Rand Fishkin and Mike King. They claim the materials offer a look under the hood of Google’s closely guarded search engine, suggesting the company tracks data like user clicks and Chrome browsing activity – signals that Google representatives have previously downplayed as ranking factors.

However, Google has cautioned against jumping to conclusions based solely on the leaked files, as many in the SEO community have made potentially inaccurate assumptions about how the leaked data points fit into Google’s systems.

A representative from Google conveyed in a statement to The Verge:

“We would caution against making inaccurate assumptions about Search based on out-of-context, outdated, or incomplete information.”

Google’s Public Communication May Have A Significant Impact

The Google Search Leak has compelled Google to publicly address the situation, deviating from its usual stance of discretion. Given the ongoing speculation and debate surrounding the leaked information, Google might opt against offering further insights into its search engine and ranking methodologies. Throughout its history, Google has maintained a delicate balance, offering guidance to SEO professionals and publishers while safeguarding its algorithms from manipulation and misuse.

Looking Ahead

The Google Search Leak documents offer insights into Google’s search algorithms, yet there are significant gaps concerning the specifics of data collection and weighting.

The prevailing perspective advocates using the leaked information as a starting point for further investigation and experimentation, rather than unquestioningly accepting it as definitive insight into search ranking determinants.

While open and collaborative discourse is valued within the SEO community, it’s crucial to complement knowledge-sharing with thorough testing, a critical mindset, and acknowledgment of the constraints inherent in any singular data source, including internal Google materials.

FAQs

What does the Google search leak tell us?

The leak confirms that Google uses various factors for ranking, including content quality, backlinks, user clicks, and even data from Chrome users. It also sheds light on how Google stores and processes information for search results.

Does the Google search leak reveal the entire algorithm?

No, the leak doesn’t provide the specific formulas or scoring systems used by Google’s ranking algorithm. The leaked documents focus more on data storage and how different elements are used.

How will this leak affect SEO?

While the leak confirms some SEO best practices, it likely won’t lead to significant breakthroughs in manipulating search rankings. Google’s algorithm constantly evolves, and the leaked documents may not reflect the latest updates.

Is this a security risk for Google?

Although the leak of internal documents is certainly not ideal, it likely doesn’t pose a major security threat. The information revealed focuses more on general functionalities rather than sensitive data.