It’s critical to put your best (digital) foot forward when it comes to your website. That can mean keeping Googlebot away from some pages as it crawls your site. Fortunately, robots.txt files let you do just that. In the sections below, we’ll go through why robots.txt files matter and how to create them with free robots.txt generator tools.

What Is a Robots.txt File and Why Do I Need One?

Let’s talk about what a robots.txt file is and why it’s necessary before we get into the incredibly useful (and free!) robots.txt generator tools you should check out.

There may be pages on your website that you don’t want or need Googlebot to crawl. A robots.txt file tells Google which pages and files on your website to crawl and which to ignore. Think of it as a set of instructions that keeps Googlebot from spending time on pages that don’t matter.

Here’s how it works

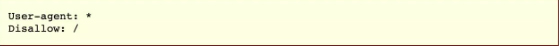

A robot wants to crawl a website URL such as http://www.coolwebsite.com/welcome.html. First, it looks for http://www.coolwebsite.com/robots.txt and reads any rules it finds there.

The disallow section tells search bots not to crawl certain pages or parts of the website.
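To make the lookup concrete, here is a minimal sketch using Python’s standard `urllib.robotparser` module. The robots.txt contents and the `/private/` path are assumptions made up for illustration; they are not the actual rules of the example site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents for www.coolwebsite.com (assumed, not from the article).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
# parse() accepts the file's lines directly, so no network request is made here.
parser.parse(ROBOTS_TXT.splitlines())

# Ask whether any bot ("*") may crawl each URL under these rules.
welcome_allowed = parser.can_fetch("*", "http://www.coolwebsite.com/welcome.html")
private_allowed = parser.can_fetch("*", "http://www.coolwebsite.com/private/notes.html")

print(welcome_allowed)  # True: no rule matches /welcome.html
print(private_allowed)  # False: the path starts with /private/
```

A well-behaved crawler runs a check like this before requesting each page and skips anything the rules disallow.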

What is the significance of a Robots.txt file?

A robots.txt file is useful for a variety of SEO purposes. For starters, it enables Google to quickly and clearly determine which pages on your site are more significant than others.

Robots.txt files can be used to prevent aspects of a website, such as audio files, from showing up in search results. Note that while a robots.txt file should not be used to conceal pages from Google, it can be used to control crawler traffic.

According to Google’s crawl budget guidance, you don’t want Google’s crawler to overwhelm your server or waste crawl budget on unimportant or near-duplicate pages on your site.

How Do I Make a Robots.txt File?

For Google, robots.txt files must be formatted in a specific way. Each website can have only one robots.txt file. The first thing to remember is that the file must be placed in the root directory of your domain.
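Because the file always lives at the root, its address can be derived from any page URL on the site. The sketch below shows one way to do that in Python; `robots_txt_url` is a hypothetical helper name, and the page URL is only an example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the root-level robots.txt URL for the site hosting page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; replace the path and drop the query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


location = robots_txt_url("http://www.coolwebsite.com/blog/posts/welcome.html?ref=home")
print(location)  # http://www.coolwebsite.com/robots.txt
```

A file placed anywhere else, such as in a subdirectory, would not be found at this address.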

For detailed instructions on manually creating robots.txt files, go to Google Search Central. If you’d rather not write the file by hand, we’ve compiled a list of 10 free robots.txt generator tools.

10 Free Robots.txt Generator Tools:

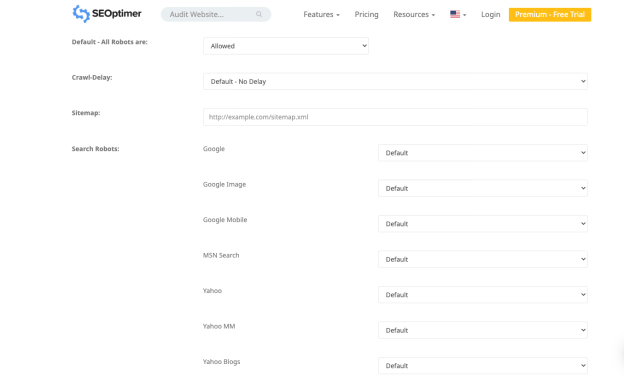

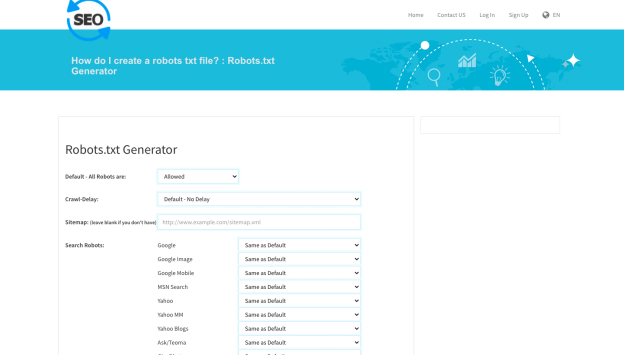

1. SEO Optimer

SEO Optimer’s tool lets you build a robots.txt file for free through a straightforward interface. You can choose a crawl-delay period and decide which bots are permitted or prohibited from crawling your site.
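The output of a generator like this is plain text. As a rough sketch of the idea (not SEO Optimer’s actual implementation), the hypothetical `build_robots_txt` helper below turns per-bot choices and an optional crawl delay into robots.txt rules; the bot names are examples.

```python
from typing import Dict, Optional


def build_robots_txt(bot_rules: Dict[str, bool], crawl_delay: Optional[int] = None) -> str:
    """Build robots.txt text from {user_agent: allowed} pairs (hypothetical helper)."""
    lines = []
    for user_agent, allowed in bot_rules.items():
        lines.append(f"User-agent: {user_agent}")
        # An empty Disallow permits everything; "Disallow: /" blocks the whole site.
        lines.append("Disallow:" if allowed else "Disallow: /")
        # A crawl delay only makes sense for bots that are allowed to crawl.
        if crawl_delay is not None and allowed:
            lines.append(f"Crawl-delay: {crawl_delay}")
        lines.append("")  # a blank line separates rule groups
    return "\n".join(lines)


robots_txt = build_robots_txt({"Googlebot": True, "BadBot": False}, crawl_delay=10)
print(robots_txt)
```

Keep in mind that Crawl-delay is a non-standard directive: some crawlers honor it, but Googlebot ignores it.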

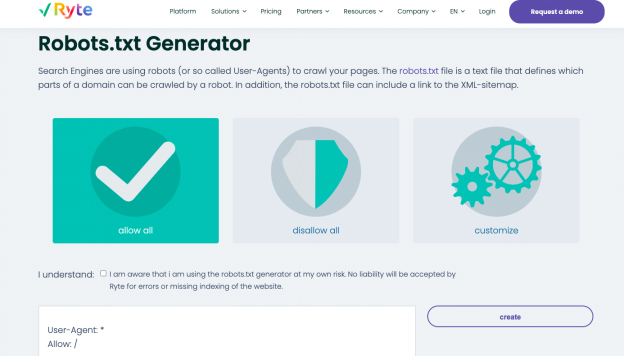

2. Ryte

Ryte’s free generator offers three options for generating a robots.txt file: allow all, disallow all, and customize. The customize option lets you choose which bots the rules apply to and walks you through the process step by step.
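The three options correspond to three shapes of rule set. The sketch below writes each one out and checks it with Python’s standard `urllib.robotparser`; the bot name `ExampleBot`, the URL, and the `is_allowed` helper are assumptions for illustration, not Ryte’s output.

```python
from urllib.robotparser import RobotFileParser

# Allow all: an empty Disallow blocks nothing.
ALLOW_ALL = "User-agent: *\nDisallow:\n"
# Disallow all: "/" matches every path on the site.
DISALLOW_ALL = "User-agent: *\nDisallow: /\n"
# Customize (hypothetical): block one named bot and allow everyone else.
CUSTOM = "User-agent: ExampleBot\nDisallow: /\n\nUser-agent: *\nDisallow:\n"


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Parse the given rules and report whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


url = "https://www.example.com/page.html"
print(is_allowed(ALLOW_ALL, "Googlebot", url))     # True
print(is_allowed(DISALLOW_ALL, "Googlebot", url))  # False
print(is_allowed(CUSTOM, "ExampleBot", url))       # False
print(is_allowed(CUSTOM, "Googlebot", url))        # True
```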

Ryte also helps you conduct SEO audits to identify content, technical, and structural errors on your website. Start an analysis with a single click or schedule regular crawls to automate data streams. You can customize the crawl with more than 15 configuration options or use the API to integrate data directly into your regular workflow. The tool gives you the information you need to improve your website’s UX.

Ryte’s keyword recommendations help you create engaging content that can rank higher in the SERPs. Once you have worked the keyword recommendations in, you can enrich your web content with structured data and schema.org tags. You can then export the finished text to HTML and copy and paste it into your CMS.

3. Better Robots.txt (WordPress)

The Better Robots.txt WordPress plugin aims to improve the SEO and loading speed of your website. It can protect your data and content against harmful bots and is available in seven languages. The plugin works with the Yoast SEO plugin and other sitemap generators, and it can detect whether you currently use Yoast SEO or whether other sitemaps are available on your website. To add your sitemap, you only need to copy and paste the sitemap URL. Better Robots.txt will then add it to your robots.txt file.
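A sitemap reference in robots.txt is a single `Sitemap:` line. As a rough sketch of what adding one involves (this is not the plugin’s code), the hypothetical `add_sitemap` helper below appends the line and then reads it back with Python’s standard parser. The rules and the sitemap URL are example values, and `site_maps()` requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser


def add_sitemap(robots_txt: str, sitemap_url: str) -> str:
    """Append a Sitemap line unless that exact line is already present."""
    line = f"Sitemap: {sitemap_url}"
    if line in robots_txt.splitlines():
        return robots_txt
    return robots_txt.rstrip("\n") + "\n\n" + line + "\n"


# Example rules and sitemap URL (assumed for illustration).
base = "User-agent: *\nDisallow: /wp-admin/\n"
updated = add_sitemap(base, "https://www.example.com/sitemap.xml")

parser = RobotFileParser()
parser.parse(updated.splitlines())
sitemaps = parser.site_maps()
print(sitemaps)  # ['https://www.example.com/sitemap.xml']
```

Calling `add_sitemap` again with the same URL returns the text unchanged, so the reference is never duplicated.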

The Better Robots.txt plugin helps you block the most common bad bots from crawling your site and affecting your data. It protects your WordPress website against scrapers and spiders. It also offers upgrades with a more comprehensive list of bad bots for the tool to block.

4. Virtual Robots.txt (WordPress)

The WordPress plugin Virtual Robots.txt is an easy and automated solution to build and manage a robots.txt file for your website. You only need to upload and activate the plugin before making the most of it.

By default, the Virtual Robots.txt plugin allows access to the parts of WordPress that good bots, like Google, need to reach, while the other parts of WordPress remain blocked. When the plugin detects an existing XML sitemap file, it automatically adds a reference to it in the robots.txt file.

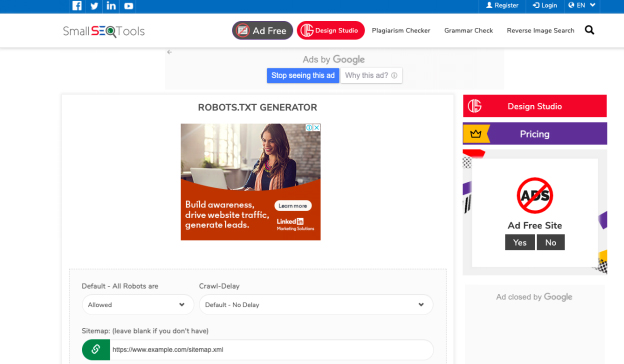

5. Small SEO Tools

Another simple tool for creating a robots.txt file is Small SEO Tools’ free generator. It uses drop-down menus to set each bot’s preferences, so you can choose for each bot whether it is allowed or not.

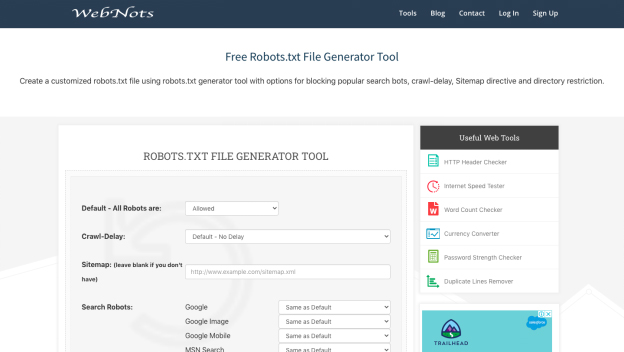

6. Web Nots

With its minimalist appearance, Web Nots’ robots.txt generation tool resembles Small SEO Tools’ generator. It also includes drop-down menus and a section for restricted directories. When you’re finished, you can download the robots.txt file.

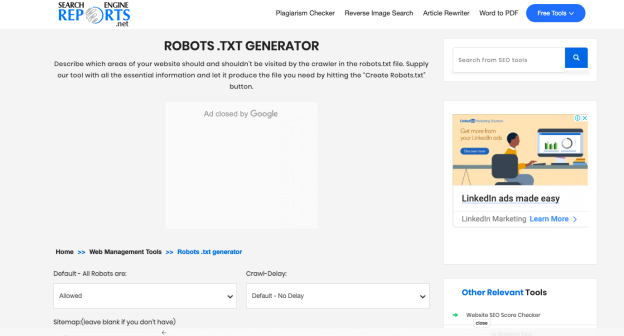

7. Search Engine Reports

The generator from Search Engine Reports provides sections for your sitemap and any restricted directories. This free application is a quick way to create a robots.txt file.

Search Engine Reports also offers an online plagiarism checker that handles up to 2,000 words of content at a time. You can paste the content into the box and click the submit button, and its Dropbox integration lets you upload content directly from that platform.

You can also upload files stored on your system with the “Upload file” option, or add a URL to the box and let the plagiarism checker run a detailed check on that page.

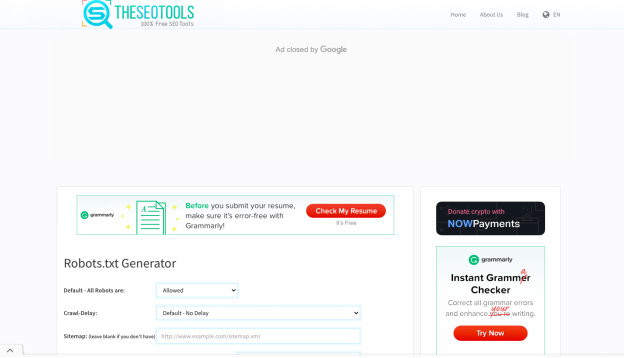

8. SEO Tools

The free robots.txt generator from SEO Tools is a simple and quick way to create a robots.txt file for your website. If you like, you can define a crawl-delay and enter your sitemap. When you’ve finished selecting the options you want, click “Create and Save as Robots.txt.”

9. SEO To Checker

Another useful tool for creating a robots.txt file is SEO To Checker’s robots.txt generator. You can adjust the settings for each search robot and add your sitemap.

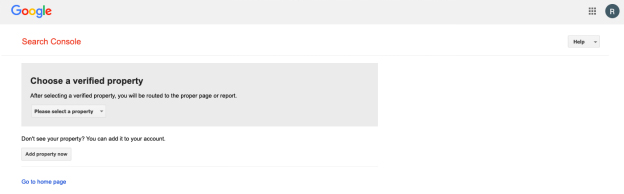

10. Google Search Console Robots.txt Tester

Once you’ve created a robots.txt file, you can use Google Search Console’s robots.txt tester. Submit a URL to the tester tool to check whether your file is written correctly to keep Googlebot out of the sections you want to hide.
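You can run a similar check locally before uploading the file. The sketch below uses Python’s standard `urllib.robotparser` to list which URLs a given bot would be blocked from. The rules, the URLs, and the `blocked_urls` helper are assumptions for illustration. The standard parser uses simple prefix matching and may not treat wildcard patterns the way Google does, so treat it as a rough pre-check rather than a substitute for Google’s own tool.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical rules and URLs, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/
Disallow: /checkout/
"""

URLS = [
    "https://www.example.com/",
    "https://www.example.com/drafts/new-post.html",
    "https://www.example.com/checkout/cart",
    "https://www.example.com/blog/robots-guide.html",
]


def blocked_urls(robots_txt: str, user_agent: str, urls: List[str]) -> List[str]:
    """Return the URLs that user_agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


blocked = blocked_urls(ROBOTS_TXT, "Googlebot", URLS)
for url in blocked:
    print("Blocked for Googlebot:", url)
```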

Summing it up!

The tools listed above make creating a robots.txt file simple and quick. A healthy, well-performing website, however, takes more than a robots.txt file. Improving technical SEO is critical for giving your website the visibility it needs and the SEO success it deserves.